Marta Molinas

About

Project proposals for ITK 2025-2026 (Molinas)

All available projects are based on a new low-density EEG concept, FlexEEG, designed for EEG source imaging, signal analysis, and AI-based classification, as described below:

David vs. Goliath – FlexEEG: A novel approach to reduced-channel EEG systems with brain imaging capabilities.

This project is the foundation for all sub-projects offered by my team. It is a multidisciplinary project in collaboration with the Kavli Institute for Systems Neuroscience, the NTNU Developmental Neuroscience Laboratory, the Department of Computer Science, Juntendo University (Japan), the RIKEN Center for Brain Science (Japan), the International Institute of Integrative Sleep Medicine (IIIS) Human Sleep Lab of the University of Tsukuba (Japan), the Indian Institute of Technology Roorkee (India), and Aalborg University and Aarhus University (Denmark).

All listed projects require two students.

Traditional EEG recordings use high-density wet electrodes (60+ channels) arranged in a regular scalp pattern, requiring gel for stable signals. This method is time-consuming, costly, and uncomfortable.

FlexEEG offers an alternative with reduced-channel, dry, wireless electrodes, demanding expertise in electronics, signal processing, inverse problem-solving, and system identification. Our team, including Master’s, PhD, and exchange students, is actively developing such solutions, leveraging backgrounds in electronics, AI, machine learning, and embedded technologies.

Below is an artistic representation of the FlexEEG concept.

We are currently developing an in-house EEG headset featuring flexible, wireless, dry electrodes designed to move across the scalp.

The primary supervisor for these projects is Marta Molinas (marta.molinas@ntnu.no).

List of Projects

1. The AppleCatcher Game: A novel motor imagery BCI for hand rehabilitation

2. Why Some Thrive While Others Struggle: AI, Neuroimaging, and Neurofeedback for Mental Health and Resilience

3. From EEG electrodes in the scalp to images of the brain using AI.

4. FlexEEG in Human Sleep Research: within this project, 3 different topics are offered:

4.1 Rapid Eye Movement (REM) onset detection during REM sleep.

4.2 Automatic sleep stage classification based on minimally invasive EEG.

4.3 DreamID: Automatic dream emotion recognition during REM sleep based on minimally invasive EEG.

5. Mind Wandering and Brain Network Transitions in Meditation: Insights from FlexEEG

6. FlexEEG decoding of hand movement intention

7. FlexEEG headset prototype development: Within this project, 2 different topics are offered:

7.1 Design and control of a robotic system for EEG measurements

7.2 EEG signal quality analysis with moving electrodes

8. Design of an EEG based communication system for patients with Locked-in Syndrome.

9. Flying a Drone with your Mind: FlexEEG motor imagery

10. FlexEEG based BCI system for ADHD Neurofeedback

11. FlexEEG based Lie Detector

12. FlexEEG based Biometric System for Subject Identification

13. The Augmented Human: Developing a BCI for RGB-Based Automation

14. FlexEEG based Alcohol Detector for drivers

15. Prediction of Epileptic seizures with AI integrated FlexEEG.

16. FlexEEG for BabyBCI

17. Combining EEG and motion capture in immersive VR environment

Description of Projects

1. The AppleCatcher Game: A novel motor imagery BCI for hand rehabilitation

This project will further develop a new BCI framework for studying cortical activation during imagined hand movements. The framework will serve as a foundation for integrating adaptive neuromodulation into post-stroke rehabilitation therapies.

An initial demonstration of this concept can be seen in the AppleCatcher Game video, while the proof of concept developed by previous students is available at BCI Workshop 2025.

The existing codebase will be provided as a starting point for future development and improvements.

Main supervisor: Marta Molinas, marta.molinas@ntnu.no

Co-supervisor: Amita Giri, amita.giri@ece.iitr.ac.in

2. Why Some Thrive While Others Struggle: AI, Neuroimaging, and Neurofeedback for Mental Health and Resilience

This project aims to uncover the behavioral and neurocognitive mechanisms underlying stress and resilience, exploring why some individuals develop mood disorders while others remain resilient. By integrating neuroimaging methods with machine learning, the project seeks to identify brain networks associated with emotional well-being and develop targeted interventions.

In addition to mapping resilience-related brain activity, this project will explore neurofeedback-based interventions as a potential tool for enhancing emotional regulation. Neurofeedback training, which enables individuals to modulate their brain activity in real-time, could provide a novel approach to strengthening resilience and preventing mental health disorders.

The student will collaborate with the Ziaei group at the Kavli Institute for Systems Neuroscience and will be involved in:

- Analyzing MRI data to determine which brain networks are linked to psychopathology in both healthy and depressed individuals.

- Measuring how the functional activity of large-scale networks contributes to emotional well-being.

- Developing explainable machine learning methods to predict mental disorders and identify key diagnostic regions.

- Exploring the potential for neurofeedback interventions to enhance resilience by training individuals to regulate their own brain activity.

This project offers a unique opportunity to combine cutting-edge neuroscience, AI, and real-world applications in mental health intervention.

This project will require two students, one from Cybernetics and one from the Kavli Institute to cooperate in this transdisciplinary task.

Main supervisor: Marta Molinas, marta.molinas@ntnu.no

Co-supervisor: Prof. Maryam Ziaei, maryam.ziaei@ntnu.no, Kavli Institute for Systems Neuroscience

3. From EEG electrodes in the scalp to images of the brain using AI.

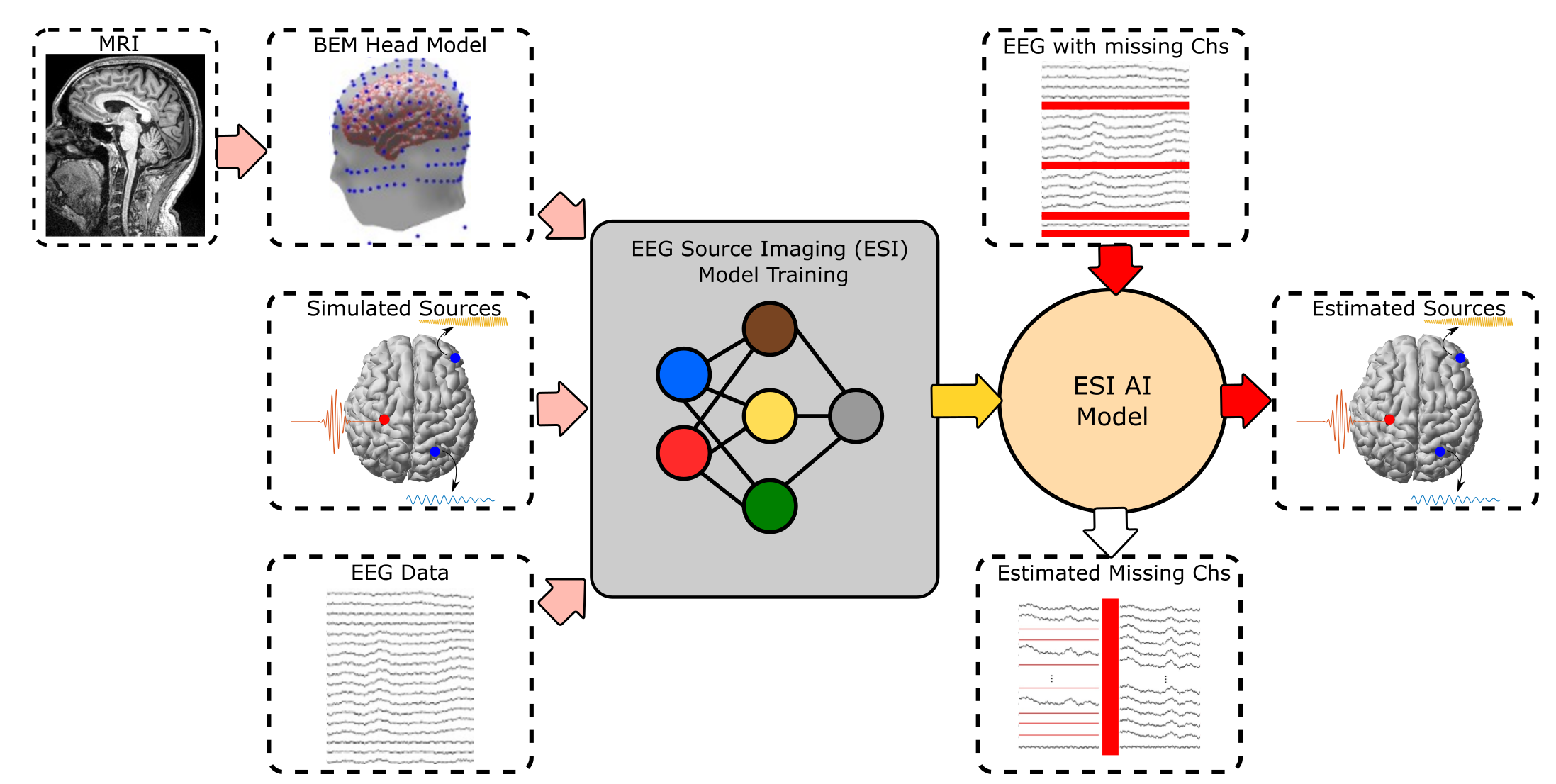

AI is revolutionizing many research fields, including EEG neurophysiological measurements and signal analysis. In this project, the aim is to apply novel AI methods to an estimation process called EEG source imaging (ESI). ESI refers to the estimation of the source activity inside the brain from the EEG signals recorded at the scalp, using brain models based on anatomical measurements (e.g. MRI). The estimated source activity can provide important information such as the location of the activity, its dispersion, and the interaction between brain areas, among other properties. This information can be used to improve the performance of brain-computer interfaces, to better understand the dynamics of the brain and the relationships between brain areas, and to localize the foci of epileptic activity.

This project seeks to explore the implementation of new ESI methods based on deep learning and advanced simulation methods for source activity. The students in this project will perform advanced simulation of brain activity and explore/create cutting-edge models to compare their performance using a simulated ground-truth. Finally, evaluations using real datasets will be performed to score the developed deep learning-based ESI methods.
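As a hedged illustration of the simulate-then-estimate workflow described above, the sketch below generates a known source, projects it to the scalp through a lead field, and recovers an estimate with a Tikhonov-regularized minimum-norm inverse. This is a classical baseline, not one of the deep learning methods the project will develop. The random lead field, the problem sizes, and the regularization value are assumptions for illustration only; a real lead field would come from an anatomical head model.

```python
import numpy as np

rng = np.random.default_rng(0)

n_sensors, n_sources = 16, 50  # hypothetical sizes for illustration
# Hypothetical random lead field; a real one comes from an MRI-based head model.
leadfield = rng.standard_normal((n_sensors, n_sources))

# Simulated ground truth: a single active source.
true_sources = np.zeros(n_sources)
active_index = 17
true_sources[active_index] = 1.0

# Forward model: scalp EEG = lead field @ sources + sensor noise.
eeg = leadfield @ true_sources + 0.01 * rng.standard_normal(n_sensors)


def minimum_norm_estimate(leadfield, eeg, reg=1e-2):
    """Tikhonov-regularized minimum-norm inverse: L^T (L L^T + reg*I)^-1 y."""
    gram = leadfield @ leadfield.T + reg * np.eye(leadfield.shape[0])
    return leadfield.T @ np.linalg.solve(gram, eeg)


estimate = minimum_norm_estimate(leadfield, eeg)

# With a simulated ground truth, the estimate can be scored directly.
peak_index = int(np.argmax(np.abs(estimate)))
peak_matches_truth = peak_index == active_index
relative_residual = np.linalg.norm(leadfield @ estimate - eeg) / np.linalg.norm(eeg)
```

Because the true source is known in simulation, any candidate ESI method can be dropped in place of `minimum_norm_estimate` and scored with the same comparison.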

This project will require two students and will be carried out in collaboration with the Indian Institute of Technology Roorkee, India.

Supervisor at NTNU: Marta Molinas, marta.molinas@ntnu.no

Supervisor at IIT Roorkee: Dr Amita Giri

4. FlexEEG in Human Sleep Research: within this project, 3 different topics are offered:

4.1 Rapid Eye Movement (REM) onset detection during REM sleep.

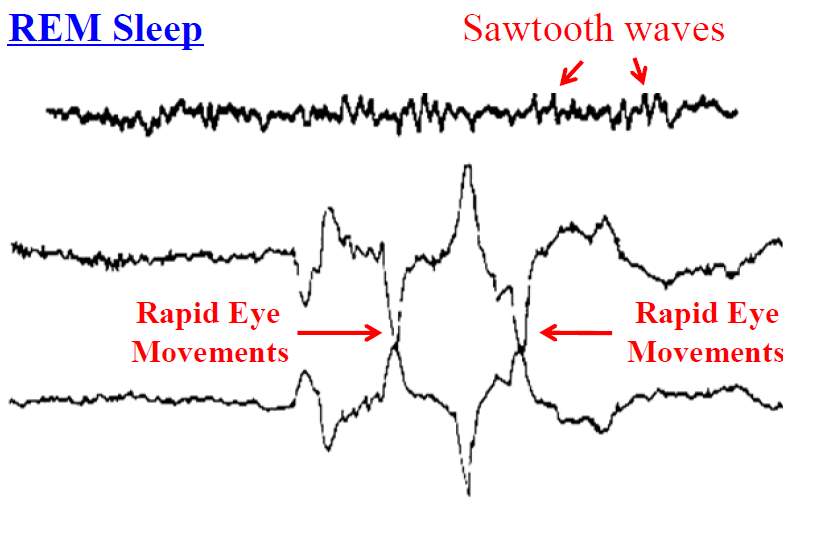

REM and NREM sleep studies have revealed much about the physiology of sleep and its disorders and about the pathophysiology of mental illness. Electrooculography (EOG) is used in polysomnography (PSG) studies to capture the electrical activity generated by the human eye, which can be regarded as an electric dipole with its positive and negative poles at the cornea and retina, respectively. Phasic REM sleep parameters include the number and incidence of rapid eye movements. This project will use EOG signals from PSG studies to detect the exact number and timing of rapid eye movements during REM sleep. The algorithm will be implemented by modelling the peak-gradient relationship and duration of the movements in EOG signals, starting with the parameters proposed in [1]. In general, human physiological signals are qualitatively similar but not quantitatively identical. For example, the sawtooth wave (STW) is one of the characteristic EEG patterns of REM sleep, but the density, duration, and frequency of STWs vary between individuals. The algorithm will focus on solving the problem of inter-individual EOG variability in order to make it more sensitive to these differences.
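The gradient, amplitude and duration criteria mentioned above can be sketched as a simple threshold detector. This is a minimal illustration only: the function name and all threshold values are hypothetical placeholders, not the parameters proposed in [1], and would need tuning per subject to address the variability discussed above.

```python
def detect_rapid_eye_movements(eog, fs, min_slope=200.0, min_amplitude=30.0,
                               max_duration=0.3):
    """Return (start_time_s, amplitude_uv) for each candidate eye movement.

    Thresholds are hypothetical (uV/s, uV, s) and not taken from [1].
    A steep run still in progress at the end of the signal is ignored.
    """
    events = []
    start = None
    for i in range(1, len(eog)):
        slope = (eog[i] - eog[i - 1]) * fs   # first difference in uV/s
        steep = abs(slope) >= min_slope
        if steep and start is None:
            start = i - 1                    # movement begins
        elif not steep and start is not None:
            end = i - 1                      # last sample of the steep run
            amplitude = eog[end] - eog[start]
            duration = (end - start) / fs
            if abs(amplitude) >= min_amplitude and duration <= max_duration:
                events.append((start / fs, amplitude))
            start = None
    return events


# Synthetic EOG at 100 Hz: flat, a 100 uV deflection over 0.1 s, then flat.
eog = [0] * 50 + [10 * k for k in range(1, 11)] + [100] * 50
events = detect_rapid_eye_movements(eog, fs=100)
```

On this synthetic trace the detector reports a single movement. Counting the returned events gives the number of movements, and the first element of each tuple gives its timing.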

This project will be in collaboration with the IIIS Human Sleep Lab of the University of Tsukuba, from where the sleep data will be obtained. The EEG ITK team is currently collaborating with the Human Sleep lab in several other projects related to sleep and sleep data is already available to initiate the project. The student in this topic will collaborate with the student in Topic 3.

[1] Kazumi Takahashi and Yoshikata Atsumi. Precise Measurement of Individual Rapid Eye Movements in REM Sleep of Humans. Sleep, 20(9):743-752, 1997. American Sleep Disorders Association and Sleep Research Society.

Basic software developed by a previous student will serve as a starting point for this project.

Main supervisor: Marta Molinas, marta.molinas@ntnu.no

Contact person in Japan: Takashi Abe, IIIS, University of Tsukuba

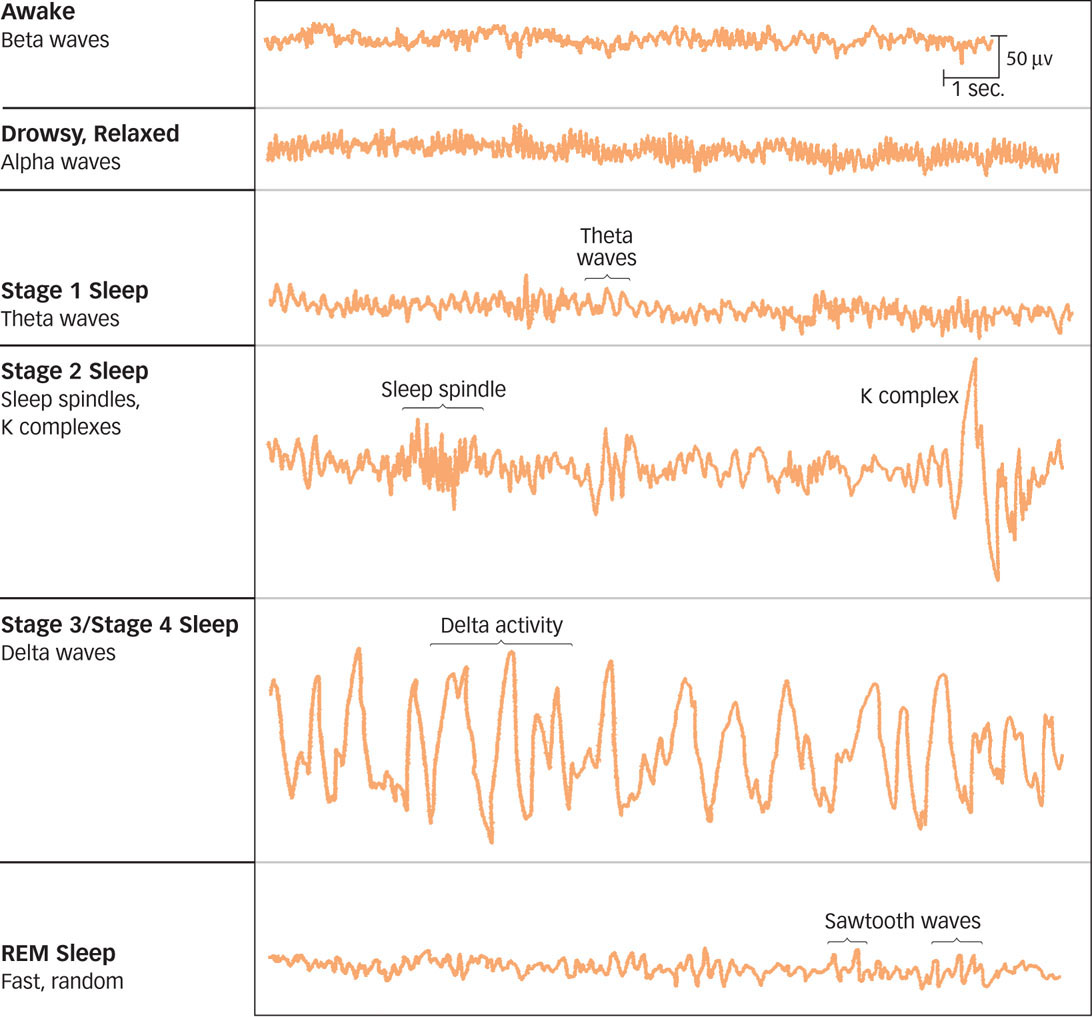

4.2 Automatic sleep stage classification based on minimally invasive EEG.

Sleep staging is a process typically performed by sleep experts and is a tedious and lengthy task, during which labels are manually assigned to polysomnographic (PSG) recording epochs. In order to reduce the time and effort required to accomplish this, a large number of automatic sleep staging methodologies have been developed. This is typically achieved through a series of steps which in general include: signal pre-processing, feature extraction and classification. The methods used for each of these steps vary greatly among the proposed approaches, with the final result providing varying degrees of accuracy. In this project, the students will develop a pipeline for sleep staging using data obtained from the IIIS Human Sleep Lab of the University of Tsukuba.

The project will focus on the use of a single type of signal (EEG) and a reduced electrode count, as these can potentially provide minimally invasive sleep monitoring solutions. The sleep stage classification will follow widely accepted rules, i.e., those of the American Academy of Sleep Medicine (AASM) and of Rechtschaffen & Kales (R&K).
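The three steps named above (pre-processing, feature extraction, classification) can be sketched on a single EEG channel as follows. This is an assumption-laden toy, not the project's pipeline: the band limits are conventional values, the naive DFT is used only to keep the example self-contained (an FFT would be used in practice), the nearest-centroid classifier and the two synthetic "training" epochs are hypothetical, and real staging under AASM or R&K rules involves more stages and features.

```python
import cmath
import math

BANDS = {"delta": (0.5, 4.0), "theta": (4.0, 8.0),
         "alpha": (8.0, 13.0), "beta": (13.0, 30.0)}


def relative_band_powers(epoch, fs):
    """Feature extraction: share of 0.5-30 Hz power in each band (naive DFT)."""
    n = len(epoch)
    mean = sum(epoch) / n
    centered = [x - mean for x in epoch]     # pre-processing: remove DC offset
    powers = dict.fromkeys(BANDS, 0.0)
    for k in range(1, n // 2):
        freq = k * fs / n
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(centered))
        for name, (low, high) in BANDS.items():
            if low <= freq < high:
                powers[name] += abs(coeff) ** 2
    total = sum(powers.values()) or 1.0
    return [powers[name] / total for name in BANDS]


def nearest_centroid(features, centroids):
    """Classification: pick the stage whose centroid is closest in feature space."""
    return min(centroids, key=lambda stage: math.dist(features, centroids[stage]))


def sine_epoch(freq, fs=64, seconds=2):
    """Synthetic stand-in for an EEG epoch dominated by one rhythm."""
    return [math.sin(2 * math.pi * freq * t / fs) for t in range(fs * seconds)]


# Hypothetical centroids: delta-dominated deep sleep vs alpha-dominated wake.
centroids = {
    "N3": relative_band_powers(sine_epoch(2.0), fs=64),
    "Wake": relative_band_powers(sine_epoch(10.0), fs=64),
}
test_features = relative_band_powers(sine_epoch(3.0), fs=64)
predicted = nearest_centroid(test_features, centroids)
```

Keeping feature extraction and classification as separate functions makes it easy to swap either step, which matters here because the proposed approaches differ mainly in those choices.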

This project will be in collaboration with the IIIS Human Sleep Lab of the University of Tsukuba, from where the sleep data will be obtained. The EEG ITK team is currently collaborating with the Human Sleep lab in several other projects related to sleep and sleep data is already available to initiate the project.

Basic software developed by a previous student will serve as a starting point for this project.

Main supervisor: Marta Molinas

Co-supervisor: Dr Luis Alfredo Moctezuma, luis.a.moctezuma@gmail.com

Contact person in Japan: Prof. Takashi Abe, IIIS, University of Tsukuba

4.3 DreamID: Automatic dream emotion recognition during REM sleep based on minimally invasive EEG.

Every day, our emotional states influence our behavior, decisions, relationships and health. Affective states play an essential role in decision-making: they can facilitate or hinder problem-solving. Emotional self-awareness can help people manage their mental health and optimize their work performance. Automatic detection of emotion dimensions can increase our understanding of emotions and promote effective communication between individuals and in human-to-machine information exchange. In addition, automatic emotion recognition will play an essential role in emotion monitoring in health-care facilities (the WHO estimates that depression, as an emotional disorder, will soon be the second leading cause of the global burden of disease), gaming and entertainment, teaching-learning scenarios, workplace performance optimization, and artificial intelligence entities designed for human interaction.

Decoding EEG signals and relating them to specific emotions is a complex problem. Affective states do not map simply onto specific brain structures, because different emotions can activate the same brain locations and, conversely, a single emotion can activate several brain structures. A neural model of human emotions would be beneficial for building an emotion recognition system and developing applications in emotion understanding and management.

The objective of this project is to develop neural models that can decode human emotions through EEG signal analysis by learning from training data from experiments designed for emotion elicitation. The process will involve signal analysis, pre-processing, feature extraction and selection, design of classification algorithms and performance evaluation.

This project will be in collaboration with the International Institute of Integrative Sleep Medicine of the University of Tsukuba (Japan).

Main supervisor: Marta Molinas

Co-supervisor: Dr Luis Alfredo Moctezuma, luis.a.moctezuma@gmail.com

Contact person in Japan: Prof. Takashi Abe, IIIS, University of Tsukuba

5. Mind Wandering and Brain Network Transitions in Meditation: Insights from FlexEEG

Mindfulness, a form of meditation defined by present-moment awareness, has been shown to be effective in treating various mental disorders with remarkably modest hours of practice (ca. 30 hours). A possible neural mechanism by which mindfulness training improves mental health is altered activity of a brain network called the default mode network (DMN). DMN activity is associated with task-irrelevant thoughts and is enhanced in some mental disorders, whereas its suppression during meditation and rest has been reported in meditation experts compared to novices. In this project, we aim to replicate the findings of an fMRI study of meditation in which the DMN is activated and deactivated during different phases of mindfulness meditation [R1]. If we can detect these transitions in DMN activity using FlexEEG, it would become possible to design a device that feeds DMN activation back to the meditator. Such flexible and user-centric EEG devices will promote the general public's well-being and advance research by enabling large-scale studies that provide evidence across populations. Such evidence will provide a rational basis for designing meditation-based interventions to improve cognitive control and emotional inhibition.

[R1] Hasenkamp, W., Wilson-Mendenhall, C. D., Duncan, E., & Barsalou, L. W. (2012). Mind wandering and attention during focused meditation: a fine-grained temporal analysis of fluctuating cognitive states. Neuroimage, 59(1), 750-760.

This project will require two students and will be carried out in collaboration with the Indian Institute of Technology Roorkee and Juntendo University, Japan.

Main Supervisor: Marta Molinas, marta.molinas@ntnu.no

Co-supervisors: Prof. Amita Giri (IIT Roorkee), Dr Sun Zhe, zhe.sun.vk@riken.jp

6. FlexEEG decoding of hand movement intention

Multiple movements, such as opening or closing the hand, grasping, or showing the palm, can be decoded from the EEG signals recorded while a person attempts those movements. The decoded movements can serve multiple purposes. For example, in neurorehabilitation they can be used to provide feedback to a patient who is undergoing therapy to recover hand movements after a stroke, and in brain-computer interfaces they can generate outputs that control an external device.

The objective of this project is to decode movement intentions by combining low-density EEG and source reconstruction (estimation of the activity inside the brain from the electrodes at the scalp). The project involves recording EEG signals from multiple participants, analyzing them, and building offline and online classifiers using state-of-the-art machine learning and deep learning algorithms.

This project will provide a foundation for developing a wearable solution based on a few electrodes that can later be used in neurorehabilitation therapies.

Main Supervisor: Marta Molinas, marta.molinas@ntnu.no

Co-supervisor: Amita Giri (IIT Roorkee)

7. FlexEEG headset prototype development: Within this project, 2 different topics will be offered:

7.1 Design and control of a robotic system for EEG measurements

The purpose of this project is to design a robotic system for the EEG electrodes. The prototype will be realized with a 3D printer.

7.2 EEG signal quality analysis of the new prototype

In this project the objective is to analyze the EEG signal and compare the quality of the measurements with standard EEG equipment.

Supervisor: Marta Molinas, marta.molinas@ntnu.no

8. Design of an EEG based communication system for patients with Locked-in Syndrome.

Locked-in syndrome (LIS) is a state of complete paralysis, except for ocular movements, in a conscious individual, normally resulting from brainstem lesions. These patients are conscious and aware of their environment but are physically disabled. Some solutions are available today to help them communicate, but they require physical training, which can be costly in both time and money. The main objective of this project is to help these patients engage more effectively in daily life by using an electroencephalogram (EEG)-based system to facilitate communication with their caretakers.

The project design will include hardware and software. The software will be a client-server architecture with the necessary preprocessing and classification algorithms for EEG signal processing. The server will include endpoints to manage eye movements classification, primary colours exposure, and mental imagery tasks (motor imagery). The classification models and the EEG data will be stored in a database.

The hardware (client) side will use the OpenBCI EEG headset and a Raspberry Pi to show the possible options, collect the EEG data, and send it to the server. The OpenBCI headset will continuously read the patient's brainwaves from the scalp while the Raspberry Pi in front of them displays six basic needs: food, water, washroom, help, sleep and entertainment.

The system (client-server) will act as a two-way communication link between patients and their caregiver, who will receive a notification via SMS or a basic Android application installed on the caregivers' phones.

Once the system is implemented and tested, the second phase of the project will consist of reducing the number of EEG channels needed, thereby reducing the size of the headset and increasing its portability.

The project will start using code already developed by previous students (available on GitHub). It is suitable for two students who can work in collaboration.

This project will require two students. A current student has developed a software platform that will serve as a starting point.

Supervisor: Marta Molinas, marta.molinas@ntnu.no

Co-supervisor: Prof. Naveed Rehman, naveed.rehman@ece.au.dk

9. Flying a Drone with your Mind: FlexEEG motor imagery

This project experiments with actuating unmanned vehicles directly with signals from the brain. This will be done using an open-source electroencephalography (EEG) headset that records brain activity, which is then translated into commands for actuating devices in real time.

The task will consist of developing a brain-computer interface (BCI) that can give flying and landing commands directly from the brain to the drone. The OpenBCI EEG headset (http://www.openbci.com/) will be used to record the brain signals, which will be processed into commands and sent wirelessly to actuate the drone. Motor imagery will be used as the command to fly the drone. Using this command, the project will fly a drone and develop trajectory control directly from the motor imagery commands, without any manual actuation system in between.

The students in this project will have access to the open-source software, EEG headset and BCI already developed for this task by students in previous years.

This project is suitable for two students to work in a team.

Basic software developed by a previous student will serve as a starting point for this project.

Supervisor: Marta Molinas, marta.molinas@ntnu.no

Co-supervisor: Dr Luis Alfredo Moctezuma, luis.a.moctezuma@gmail.com

10. FlexEEG based BCI system for ADHD Neurofeedback

Applying machine learning, brain mapping and EEG recording techniques with an open-source brain-computer interface (BCI) system, the master's student will develop a system for classifying brain activity during motor imagery tasks. The objective is to let the user navigate a 3D virtual maze using brain activity, as a treatment for Attention-Deficit Hyperactivity Disorder (ADHD).

This project is in collaboration with Juntendo University, Japan. The NTNU student in this project has the option to write the project report or the Master's thesis in Japan.

Supervisor: Marta Molinas, marta.molinas@ntnu.no

11. FlexEEG based Lie Detector

The aim of this project is to use EEG signals to identify when a user is providing deceptive information. The master's student will develop a lie detection system based on the analysis of EEG measurements, combining knowledge of event-related potentials, signal processing, and machine learning, using an open-source brain-computer interface (BCI) system.

Supervisor: Marta Molinas, marta.molinas@ntnu.no

12. FlexEEG based Biometric System for Subject Identification

The aim of this project is to implement a real-time subject identification system for real-life use, based on brain signals collected from 100 recruited volunteers in Norway. The first prototype is already developed and will be the starting point for this larger implementation. The system consists of a client-server architecture built with Python and Django. The server side uses feature extraction and machine learning techniques to add new persons to the system and save them in a database. The final implementation will be tested in a real environment, and it will be able to recognize persons already in the system and reject intruders.
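The requirement to recognize enrolled persons while rejecting intruders is an open-set identification problem. One simple way to express it, shown here as a hedged sketch rather than the prototype's actual method, is to match a feature vector against stored templates and reject when the best match is too far away. The function name, the templates, and the distance threshold are all hypothetical.

```python
import math


def identify(features, enrolled, max_distance):
    """Return the closest enrolled subject, or None to reject an intruder."""
    best_id, best_dist = None, float("inf")
    for subject_id, template in enrolled.items():
        dist = math.dist(features, template)
        if dist < best_dist:
            best_id, best_dist = subject_id, dist
    return best_id if best_dist <= max_distance else None


# Hypothetical EEG feature templates stored at enrolment.
enrolled = {
    "subject_01": [0.2, 0.7, 0.1],
    "subject_02": [0.6, 0.2, 0.2],
}

known = identify([0.22, 0.68, 0.10], enrolled, max_distance=0.1)
intruder = identify([0.9, 0.9, 0.9], enrolled, max_distance=0.1)
```

The threshold controls the trade-off between falsely accepting intruders and falsely rejecting enrolled subjects, so it would have to be chosen from recorded data.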

Basic software developed by a previous student will serve as a starting point for this project.

Supervisor: Marta Molinas, marta.molinas@ntnu.no

Co-supervisor: Dr Luis Alfredo Moctezuma, luis.a.moctezuma@gmail.com

13. The Augmented Human: Developing a BCI for RGB-Based Automation

This project explores the potential of brain-computer interfaces (BCIs) to control devices using EEG responses to primary color exposure (RGB: red, green and blue colors), moving beyond traditional paradigms that rely on external flickering stimuli like SSVEP and P300.

Students will work on two key phases:

🔹 Offline Analysis – Utilize existing EEG data collected by previous students, applying artifact removal, signal processing, and machine learning techniques to refine RGB color classification.

🔹 Online Implementation – Develop a real-time framework to control automated devices (e.g., an automated door or smart home appliances) based on EEG responses.

This hands-on project involves signal processing, machine learning, and real-time system development. Ideal for two students working collaboratively, it offers the chance to push the boundaries of non-invasive neural control systems.

The project is in collaboration with the Department of Electrical and Computer Engineering of Aarhus University, Denmark, with Prof. Naveed Rehman.

Basic software developed by a previous student will serve as a starting point for this project.

Main Supervisor: Marta Molinas, marta.molinas@ntnu.no

Co-supervisor: Prof. Naveed Rehman, naveed.rehman@ece.au.dk

14. FlexEEG: EEG-based Alcohol Detector

Measuring alcohol in the human body is an important public health task that helps prevent accidents and deaths. Currently, this is done with devices that analyse the alcohol content of breath (breathalysers). However, it is not clear whether a machine learning algorithm could give a better estimate of the effect of alcohol by using EEG signals directly. Previous work has only addressed the automatic, machine learning-based distinction between EEG signals from alcoholic and non-alcoholic subjects, with relatively good outcomes.

This project aims to assess the feasibility of using EEG signals to detect when a person has drunk alcohol, i.e., an initial design towards an EEG-based alcohol detector (Alcotest device). The research will involve designing a protocol for recording EEG from several subjects during and after the administration of alcohol, and the main focus will be on assessing whether a machine learning algorithm can effectively distinguish between the two states. Furthermore, it will involve the design of a scheme with the following stages: signal preprocessing, artifact removal, feature extraction, and classification.

This project is in collaboration with Juntendo University, Japan.

Supervisors: Marta Molinas, marta.molinas@ntnu.no, Prof. Zhe Sun, Juntendo University, Japan.

15. Prediction of Epileptic seizures with AI integrated FlexEEG.

Seizure warning devices using predictive algorithms could greatly improve quality of life by detecting the preictal phase—the transitional period before a seizure. Traditionally, seizure prediction relies on detecting this phase and triggering an alarm. However, recent research advocates for seizure forecasting, which provides continuous risk assessment rather than a binary alarm system. This project will compare prediction and forecasting methods using publicly available datasets (e.g., EPILEPSIAE database).
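The difference between the two outputs can be made concrete with a small sketch. It assumes that some upstream model already produces a preictal score between 0 and 1 for each EEG window; the scores, the alarm threshold, and the moving-average window below are hypothetical, and published forecasting methods are considerably more elaborate.

```python
def alarm_prediction(scores, threshold=0.8):
    """Prediction: a binary alarm whenever the preictal score crosses a threshold."""
    return [score >= threshold for score in scores]


def risk_forecast(scores, window=3):
    """Forecasting: a continuous risk level, here a moving average of recent scores."""
    risks = []
    for i in range(len(scores)):
        recent = scores[max(0, i - window + 1): i + 1]
        risks.append(sum(recent) / len(recent))
    return risks


# Hypothetical classifier outputs, one per EEG window.
scores = [0.1, 0.2, 0.6, 0.9, 0.7]
alarms = alarm_prediction(scores)   # one alarm, at the fourth window
risks = risk_forecast(scores)       # risk rises gradually across windows
```

The alarm fires only at the fourth window, whereas the forecast already shows rising risk one window earlier, which is the kind of graded information the forecasting view aims to provide.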

The starting point will be this study: Forecasting approach to epilepsy prediction

Main supervisor: Marta Molinas

Co-supervisor: Dr Luis Alfredo Moctezuma, luis.a.moctezuma@gmail.com

16. FlexEEG for BabyBCI

This project will develop the foundation for using EEG neuroimaging for real-time BCI classification of infant EEG data. Source-space features have previously been used in our work with motor imagery (Soler et al., 2024) and have shown considerable potential for improving classification compared with the more widely used sensor-space features. To extract good source-space features, especially with the 128-electrode HD-EEG used in the data we have, it is essential to work with optimization techniques, and we suggest the following task:

- Model-Based Electrode Position Optimization for BCI Design: To develop the BabyBCI, the number of EEG electrodes needs to be minimized to ensure ease of use and enable real-time computation. The process will begin with high-density EEG recordings and an average infant brain model (Almli et al., 2007; Sanchez et al., 2012) that has been used successfully in source-space analyses, which have identified brain regions activated during visual motion perception in both full-term and premature infants (Nenseth, S.-A. et al., under review). Based on the HD-EEG recordings and the domain knowledge that gives us the brain regions of interest, we will employ optimization techniques, building on our previous work with NSGA optimization (Soler et al., 2022), to determine the key EEG electrodes required for identifying brain activity related to the visual motion perception paradigms in babies. Although NSGA optimization has proven fruitful in source localization, we will use multiple optimization techniques as part of the research.
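Electrode selection of this kind trades off at least two objectives: fewer electrodes and lower source-localization error. NSGA-type algorithms rank candidate solutions by non-domination. The sketch below shows only that ranking step, on hypothetical candidates with made-up objective values; it is not the optimization procedure of Soler et al. (2022) and omits the genetic search itself.

```python
def dominates(a, b):
    """a dominates b if it is no worse in every objective and better in at least one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(candidates):
    """Keep the candidates whose objective vectors are not dominated by any other."""
    return {
        name: objectives
        for name, objectives in candidates.items()
        if not any(dominates(other, objectives) for other in candidates.values())
    }


# Hypothetical (number of electrodes, localization error in mm) per electrode subset.
candidates = {
    "subset_A": (4, 18.0),
    "subset_B": (8, 11.0),
    "subset_C": (8, 14.0),    # dominated by subset_B
    "subset_D": (16, 9.5),
    "subset_E": (32, 9.5),    # dominated by subset_D
}
front = pareto_front(candidates)
```

The resulting front contains the subsets among which no objective can be improved without worsening the other, leaving the final choice of electrode count to the designer.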

This project will be in collaboration with the Developmental Neuroscience Laboratory of NTNU.

Main supervisor: Marta Molinas, marta.molinas@ntnu.no

Co-Supervisor: Audrey Van Der Meer, audrey.meer@ntnu.no

17. Combining EEG and motion capture in immersive VR environment

Electroencephalography (EEG) is an electrophysiological monitoring method that measures electrical activity in the brain. Using small noninvasive electrodes placed along the scalp, EEG records spontaneous electrical activity in the brain. Analyzing EEG data helps researchers understand cognitive processes such as emotion, perception and attention, as well as various behavioral processes.

Optical motion capture systems allow precise recordings of participants' motor behavior inside small or large laboratories, including information on absolute position. Movement tracking is useful in, for example, rehabilitation settings, where tracking a person's movements during training can provide feedback on whether an exercise is performed correctly. Traditional systems often use specialized sensors (Kinect, accelerometers, marker-based motion tracking) and are therefore limited in their area of application and usability. With advances in machine learning for Human Pose Estimation (HPE), HPE-based movement tracking has become a viable alternative to sensor-based motion tracking.

Combining HPE-based movement tracking and EEG can provide the patient with more holistic feedback and help with progress in rehabilitation.

In this study, the students will combine EEG and HPE-based movement tracking for an existing VR exergame. The EEG can be used to give the patient additional feedback on, for example, their attention level, movement intention, or cognitive load.

For comprehensive analysis of bio-signals tracked by various sensors, movement and brain data need to be time-synchronized with the VR content. Candidate pipelines are https://www.neuropype.io/ and https://timeflux.io/, based on LabStreamingLayer. They should work out of the box with our EEG equipment and Unity, but are not yet synchronized with HPE.
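Once all streams carry timestamps on a shared clock, synchronizing HPE frames with EEG reduces to matching timestamps. The sketch below shows one simple option, nearest-timestamp alignment with a maximum allowed gap. It uses no pipeline-specific API; the function name, sampling rates, and timestamps are hypothetical, and it assumes that clock offsets between devices have already been corrected.

```python
from bisect import bisect_left


def align_nearest(reference_times, other_times, max_gap):
    """For each reference timestamp, return the index of the nearest sample in
    other_times (sorted ascending), or None if the gap exceeds max_gap seconds."""
    matches = []
    for t in reference_times:
        pos = bisect_left(other_times, t)
        neighbours = [i for i in (pos - 1, pos) if 0 <= i < len(other_times)]
        best = min(neighbours, key=lambda i: abs(other_times[i] - t), default=None)
        if best is not None and abs(other_times[best] - t) > max_gap:
            best = None
        matches.append(best)
    return matches


# Hypothetical timestamps in seconds on a shared clock.
pose_times = [0.00, 0.04, 0.08, 0.50]        # HPE frames, the last after a gap
eeg_times = [i / 250 for i in range(50)]     # 250 Hz EEG covering 0.0 to 0.196 s
matches = align_nearest(pose_times, eeg_times, max_gap=0.01)
```

Frames without a sufficiently close EEG sample are returned as `None` rather than silently paired with distant data, which keeps dropped frames visible in later analysis.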

The study is associated with Vizlab. The needed equipment (VR headset, EEG equipment) and basic training on how to use it are provided.

This project is in collaboration with the Department of Computer Science. Two students will work together, one from the Department of Computer Science and one from the Cybernetics department.

Main supervisor: Marta Molinas, marta.molinas@ntnu.no

Co-Supervisor: Xiaomeng Su, xiaomeng.su@ntnu.no, Department of Computer Science

Publications

2025

- Shah, Chirag Ramgopal; Cabrera, Maria Marta Molinas; Bana, Prabhat Ranjan; Føyen, Sjur; Nilsen, Roy (2025). Unveiling Stability Insights of Model Predictive Current Control in Voltage Source Converter through Impedance Analysis. International Conference on Clean Electrical Power. Abstract.

- Ruan, Hao; Xiao, Yi; Luo, Hao; Yang, Yongheng; Cabrera, Maria Marta Molinas; Luo, Haoze (2025). Optimized Parameter Design of Grid-Following and Grid-Forming Converters for Wide Operating Region. IEEE Journal of Emerging and Selected Topics in Power Electronics. Academic article.

- Shah, Chirag Ramgopal; Cabrera, Maria Marta Molinas; Føyen, Sjur; D'Arco, Salvatore; Nilsen, Roy; Amin, Mohammad (2025). Single Coordinate Bode Plots for Stability Evaluation of MIMO Power Electronics Systems via a Frequency Coupling Corrective Factor. IEEE Transactions on Power Electronics. Academic article.

- Gao, Lei; Lyu, Jing; Zong, Xin; Cai, Xu; Cabrera, Maria Marta Molinas (2025). Online Oscillatory Stability Assessment of Renewable Energy Integrated Systems Based on Data-Driven and Knowledge-Driven Method. IEEE Transactions on Power Delivery. Academic article.

- Moctezuma, Luis Alfredo; Cabrera, Maria Marta Molinas; Abe, Takashi (2025). Unlocking Dreams and Dreamless Sleep: Machine Learning Classification With Optimal EEG Channels. BioMed Research International. Academic article.

-

Yang, Ling;

Luo, Jiahao;

Liao, Junhao;

Wen, Xutao;

Yuan, Chongyao;

Wang, Yu.

(2025)

A fast SOC balancing control strategy for distributed energy storage system based on sinusoidal signal injection.

International Journal of Electrical Power & Energy Systems

Vitenskapelig artikkel

2024

-

Teshome, Abeba Debru;

Kahsay, Mulu Bayray;

Cabrera, Maria Marta Molinas.

(2024)

Assessment of power curve performance of wind turbines in Adama-II Wind Farm.

Energy Reports

Vitenskapelig artikkel

-

Soler, Andres;

Giraldo, Eduardo;

Cabrera, Maria Marta Molinas.

(2024)

EEG source imaging of hand movement-related areas: an evaluation of the reconstruction and classification accuracy with optimized channels.

Brain Informatics

Vitenskapelig artikkel

-

Sletta, Øystein Stavnes;

Cheema, Amandeep;

Marthinsen, Anne Joo Yun;

Andreassen, Ida Marie;

Sletten, Christian Moe;

Galtung, Ivar Tesdal.

(2024)

Newly identified Phonocardiography frequency bands for psychological stress detection with Deep Wavelet Scattering Network.

Computers in Biology and Medicine

Vitenskapelig artikkel

-

Aljalal, Majid;

Cabrera, Maria Marta Molinas;

Aldosari, Saeed A.;

AlSharabi, Khalil;

Abdurraqeeb, Akram M.;

Alturki, Fahd A..

(2024)

Mild cognitive impairment detection with optimally selected EEG channels based on variational mode decomposition and supervised machine learning.

Biomedical Signal Processing and Control

Vitenskapelig artikkel

-

Shah, Chirag Ramgopal;

Føyen, Sjur;

Cabrera, Maria Marta Molinas;

Nilsen, Roy;

Amin, Mohammad.

(2024)

Influence of controller and reference frame on impedance coupling strength, its quantification and application to stability studies.

Vitenskapelig Kapittel/Artikkel/Konferanseartikkel

-

Shah, Chirag Ramgopal;

Cabrera, Maria Marta Molinas;

Nilsen, Roy;

Amin, Mohammad.

(2024)

Impedance Reshaping of GFM Converters with Selective Resistive Behaviour for Small-signal Stability Enhancement.

Vitenskapelig Kapittel/Artikkel/Konferanseartikkel

-

Urdahl, Paal Scheline;

Omsland, Vegard;

Løkken, Sandra Garder;

Dokken, Mari Hestetun;

Guevara, Andres Felipe Soler;

Cabrera, Maria Marta Molinas.

(2024)

Impact of Ocular Artifact Removal on EEG-Based Color Classification for Locked-In Syndrome BCI Communication.

Communications in Computer and Information Science (CCIS)

Vitenskapelig artikkel

-

Vassbotn, Molly;

Guevara, Andres Felipe Soler;

Cabrera, Maria Marta Molinas;

Nordstrøm-Hauge, Iselin Johanna.

(2024)

EEG-Based Alcohol Detection System for Driver Monitoring.

International Journal of Psychological Research

Vitenskapelig artikkel

-

Moctezuma, Luis Alfredo;

Suzuki, Yoko;

Furuki, Junya;

Cabrera, Maria Marta Molinas;

Abe, Takashi.

(2024)

GRU-powered sleep stage classification with permutation-based EEG channel selection.

Scientific Reports

Vitenskapelig artikkel

-

Tian, Bing;

Li, Yingzhen;

Hu, Jiasongyu;

Wang, Gaolin;

Cabrera, Maria Marta Molinas;

Zhang, Guoqiang.

(2024)

A wide speed range sensorless control for three-phase PMSMs based on a high-dynamic back-EMF observer.

IEEE Transactions on Transportation Electrification

Vitenskapelig artikkel

-

Hernández-Ramírez, Julio;

Segundo-Ramírez, Juan;

Cabrera, Maria Marta Molinas.

(2024)

Comprehensive DQ impedance modeling of AC power-electronics-based power systems with frequency-dependent transmission lines.

Electric power systems research

Vitenskapelig artikkel

-

Ma, Ke;

Liu, Jinjun;

Cabrera, Maria Marta Molinas.

(2024)

Guest Editorial: Special Issue on Design and Validation Methodologies for Power Electronics Components and Systems.

IEEE Journal of Emerging and Selected Topics in Power Electronics

Vitenskapelig oversiktsartikkel/review

-

Chang, Liuchen;

Mazumder, Sudip K.;

Cabrera, Maria Marta Molinas.

(2024)

Guest Editorial: Special Issue on Power Electronics for Distributed Energy Resources.

IEEE Journal of Emerging and Selected Topics in Power Electronics

Vitenskapelig oversiktsartikkel/review

-

Zhang, Yu;

Zhang, Chen;

Cabrera, Maria Marta Molinas;

Cai, Xu.

(2024)

Control of Virtual Synchronous Generator with Improved Transient Angle Stability under Symmetric and Asymmetric Short Circuit Fault.

IEEE transactions on energy conversion

Vitenskapelig artikkel

-

Khodaparast, Jalal;

Fosso, Olav Bjarte;

Cabrera, Maria Marta Molinas;

Suul, Jon Are Wold.

(2024)

Power system instability prediction from the solution pattern of differential Riccati equations.

The Journal of Engineering

Vitenskapelig artikkel

-

Føyen, Sjur;

Shah, Chirag Ramgopal;

Zhang, Chen;

Cabrera, Maria Marta Molinas.

(2024)

Synchronisation, dispatch and droop of VSCs: revisiting functionality in various coordinate systems.

IEEE International Symposium on Power Electronics for Distributed Generation Systems (PEDG)

Vitenskapelig artikkel

-

Aljalal, Majid;

Aldosari, Saeed A.;

Cabrera, Maria Marta Molinas;

Alturki, Fahd A..

(2024)

Correction to: Selecting EEG channels and features using multi-objective optimization for accurate MCI detection: validation using leave-one-subject-out strategy (Scientific Reports, (2024), 14, 1, (12483), 10.1038/s41598-024-63180-y).

Scientific Reports

Errata

-

Aljalal, Majid;

Aldosari, Saeed A.;

Cabrera, Maria Marta Molinas;

Alturki, Fahd A..

(2024)

Selecting EEG channels and features using multi-objective optimization for accurate MCI detection: validation using leave-one-subject-out strategy.

Scientific Reports

Vitenskapelig artikkel

2023

-

Føyen, Sjur;

Zhang, Chen;

Zhang, Yu;

Isobe, Takanori;

Fosso, Olav Bjarte;

Cai, Xu.

(2023)

Frequency Scanning of Weakly Damped Single-Phase VSCs With Chirp Error Control.

IEEE transactions on power electronics

Vitenskapelig artikkel

-

Bana, Prabhat Ranjan;

Amin, Mohammad;

Cabrera, Maria Marta Molinas.

(2023)

ANN-Based Surrogate PI and MPC Controllers for Grid-Connected VSC System: Small-Signal Analysis and Comparative Evaluation.

IEEE Journal of Emerging and Selected Topics in Power Electronics

Vitenskapelig artikkel

-

Fløtaker, Simen Piene;

Soler, Andres;

Cabrera, Maria Marta Molinas.

(2023)

Primary color decoding using deep learning on source reconstructed EEG signal responses.

Vitenskapelig Kapittel/Artikkel/Konferanseartikkel

-

Marthinsen, Anne Joo Yun;

Galtung, Ivar Tesdal;

Cheema, Amandeep;

Sletten, Christian Moe;

Andreassen, Ida Marie;

Sletta, Øystein Stavnes.

(2023)

Psychological stress detection with optimally selected EEG channel using Machine Learning techniques.

CEUR Workshop Proceedings

Vitenskapelig artikkel

-

Shah, Chirag Ramgopal;

Cabrera, Maria Marta Molinas;

Nilsen, Roy;

Amin, Mohammad.

(2023)

Limitations in Impedance Reshaping of Grid Forming Converters for Instability Prevention.

Vitenskapelig Kapittel/Artikkel/Konferanseartikkel

-

Soler, Andres;

Giraldo, Eduardo;

Cabrera, Maria Marta Molinas.

(2023)

EEG source imaging of hand movement-related areas: An evaluation of the reconstruction accuracy with optimized channels.

Lecture Notes in Computer Science (LNCS)

Vitenskapelig artikkel

-

Park, Daeseong;

Zadeh, Mehdi;

Cabrera, Maria Marta Molinas.

(2023)

Model-Based Design of Marine Hybrid Power Systems - With a case study of DC Grid Architecture and Control.

Norges teknisk-naturvitenskapelige universitet

Norges teknisk-naturvitenskapelige universitet

Doktorgradsavhandling

-

Yang, Ling;

Huang, Zehang;

Chen, Jinghua;

Luo, Jianqiang;

Wang, Yu;

Cabrera, Maria Marta Molinas.

(2023)

Stability Analysis and Interaction-Rule-Based Optimization of Multisource and Multiload DC Microgrid.

IEEE transactions on power electronics

Vitenskapelig artikkel

-

Khodaparast, Jalal;

Fosso, Olav Bjarte;

Cabrera, Maria Marta Molinas.

(2023)

Recursive Multi-Channel Prony for PMU.

IEEE Transactions on Power Delivery

Vitenskapelig artikkel

-

Zhang, Chen;

Zong, Haoxiang;

Cai, Xu;

Cabrera, Maria Marta Molinas.

(2023)

On the Relation of Nodal Admittance- and Loop Gain-Model Based Frequency-Domain Modal Methods for Converters-Dominated Systems.

IEEE Transactions on Power Systems

Vitenskapelig artikkel

-

Zhang, Yu;

Zhang, Chen;

Yang, Renxing;

Cabrera, Maria Marta Molinas;

Cai, Xu.

(2023)

Current-Constrained Power-Angle Characterization Method for Transient Stability Analysis of Grid-Forming Voltage Source Converters.

IEEE transactions on energy conversion

Vitenskapelig artikkel

-

Tian, Bing;

Lu, Runze;

Wei, Jiadan;

Cabrera, Maria Marta Molinas;

Wang, Kai;

Zhang, Zhuoran.

(2023)

Neutral Voltage Modeling and Handling Approach for Five-Phase PMSMs Under Two-Phase Insulation Fault-Tolerant Control.

IEEE transactions on power electronics

Vitenskapelig artikkel

-

Ohuchi, Kazuki;

Hirase, Yuko;

Cabrera, Maria Marta Molinas.

(2023)

MIMO and SISO Impedance-based Stability Assessment of a Distributed Power Source Dominated System.

IEEJ Journal of Industry Applications

Vitenskapelig artikkel

-

Li, Jing;

Wei, Yingdong;

Li, Xiaoqian;

Lu, Chao;

Guo, Xu;

Lin, Yunzhi.

(2023)

Modeling and stability prediction for the static-power-converters interfaced flexible AC traction power supply system with power sharing scheme.

International Journal of Electrical Power & Energy Systems

Vitenskapelig artikkel

2022

-

Khodaparast, Jalal;

Fosso, Olav B;

Cabrera, Maria Marta Molinas;

Suul, Jon Are Wold.

(2022)

Static and dynamic eigenvalues in unified stability studies.

IET Generation, Transmission & Distribution

Vitenskapelig artikkel

-

Moctezuma, Luis Alfredo;

Abe, Takashi;

Cabrera, Maria Marta Molinas.

(2022)

Two-dimensional CNN-based distinction of human emotions from EEG channels selected by Multi-Objective evolutionary algorithm.

Scientific Reports

Vitenskapelig artikkel

-

Tian, Bing;

Wei, Jiadan;

Cabrera, Maria Marta Molinas;

Lu, Runze;

An, Quntao;

Zhou, Bo.

(2022)

Neutral Voltage Modeling and Its Remediation for Five-Phase PMSMs under Single-Phase Short-Circuit Fault Tolerant Control.

IEEE Transactions on Transportation Electrification

Vitenskapelig artikkel

-

Aljalal, Majid;

Aldosari, Saeed A.;

Cabrera, Maria Marta Molinas;

AlSharabi, Khalil;

Alturki, Fahd A..

(2022)

Detection of Parkinson’s disease from EEG signals using discrete wavelet transform, different entropy measures, and machine learning techniques.

Scientific Reports

Vitenskapelig artikkel

-

Føyen, Sjur;

Zhang, Chen;

Cabrera, Maria Marta Molinas;

Fosso, Olav B;

Isobe, Takanori.

(2022)

Impedance scanning with chirps for single-phase converters.

Vitenskapelig Kapittel/Artikkel/Konferanseartikkel

-

Zong, Haoxiang;

Zhang, Chen;

Cai, Xu;

Cabrera, Maria Marta Molinas.

(2022)

Analysis of Power Electronics-Dominated Hybrid

AC/DC Grid for Data-Driven Oscillation Diagnosis.

Vitenskapelig Kapittel/Artikkel/Konferanseartikkel

-

Zhang, Yu;

Føyen, Sjur;

Zhang, Chen;

Cabrera, Maria Marta Molinas;

Fosso, Olav B;

Cai, Xu.

(2022)

Instability Mode Recognition of Grid-Tied Voltage Source Converters with Nonstationary Signal Analysis.

Vitenskapelig Kapittel/Artikkel/Konferanseartikkel

-

Ohuchi, Kazuki;

Hirase, Yuko;

Cabrera, Maria Marta Molinas.

(2022)

Impedance-Based Stability Analysis of Systems with

the Dominant Presence of Distributed Power Sources.

Vitenskapelig Kapittel/Artikkel/Konferanseartikkel

-

Cabrera, Maria Marta Molinas.

(2022)

Proceedings of the 2022 International Power Electronics Conference (IPEC-Himeji 2022 - ECCE Asia).

IEEE (Institute of Electrical and Electronics Engineers)

IEEE (Institute of Electrical and Electronics Engineers)

Vitenskapelig antologi/Konferanseserie

-

Zong, Haoxiang;

Zhang, Chen;

Cai, Xu;

Cabrera, Maria Marta Molinas.

(2022)

Oscillation Propagation Analysis of Hybrid AC/DC Grids with High Penetration Renewables.

IEEE Transactions on Power Systems

Vitenskapelig artikkel

-

Soler, Andres;

Moctezuma, Luis Alfredo;

Giraldo, Eduardo;

Molinas, Marta.

(2022)

Automated methodology for optimal selection of minimum electrode subsets for accurate EEG source estimation based on Genetic Algorithm optimization.

Scientific Reports

Vitenskapelig artikkel

-

Zhang, Chen;

Cabrera, Maria Marta Molinas;

Føyen, Sjur;

Suul, Jon Are Wold;

Isobe, Takanori.

(2022)

Impedance Modeling and Comparative Analysis of

a Single-phase Grid-tied VSC Under Average and

Peak dc Voltage Controls.

Vitenskapelig Kapittel/Artikkel/Konferanseartikkel

-

Khodaparast, Jalal;

Fosso, Olav B;

Cabrera, Maria Marta Molinas.

(2022)

Phasor Estimation by EMD-Assisted Prony.

IEEE Transactions on Power Delivery

Vitenskapelig artikkel

-

Xiao, Donghua;

Hu, Haitao;

Chen, Siyi;

Song, Yitong;

Pan, Pengyu;

Cabrera, Maria Marta Molinas.

(2022)

Rapid dq-Frame Impedance Measurement for Three-Phase Grid Based on Interphase Current Injection and Fictitious Disturbance Excitation.

IEEE Transactions on Instrumentation and Measurement

Vitenskapelig artikkel

-

Leng, Minrui;

Sahoo, Subham;

Blaabjerg, Frede;

Cabrera, Maria Marta Molinas.

(2022)

Projections of Cyberattacks on Stability of DC Microgrids - Modeling Principles and Solution.

IEEE transactions on power electronics

Vitenskapelig artikkel

-

Wang, Yu;

Guan, Yuanpeng;

Cabrera, Maria Marta Molinas;

Fosso, Olav B;

Hu, Wang;

Zhang, Yun.

(2022)

Open-Circuit Switching Fault Analysis and Tolerant Strategy for Dual-Active-Bridge DC-DC Converter Considering Parasitic Parameters.

IEEE transactions on power electronics

Vitenskapelig artikkel

-

Stenwig, Håkon;

Soler, Andres;

Furuki, Junya;

Suzuki, Yoko;

Abe, Takashi;

Molinas, Marta.

(2022)

Automatic Sleep Stage Classification with Optimized Selection of EEG Channels.

Vitenskapelig Kapittel/Artikkel/Konferanseartikkel

-

Soler, Andres;

Giraldo, Eduardo;

Lundheim, Lars Magne;

Cabrera, Maria Marta Molinas.

(2022)

Relevance-based Channel Selection for EEG Source Reconstruction: An Approach to Identify Low-density Channel Subsets.

Vitenskapelig Kapittel/Artikkel/Konferanseartikkel

2021

-

Zong, Haoxiang;

Zhang, Chen;

Lyu, Jing;

Cai, Xu;

Cabrera, Maria Marta Molinas.

(2021)

Block Diagonal Dominance-Based Model Reduction Method Applied to MMC Asymmetric Stability Analysis.

IEEE transactions on energy conversion

Vitenskapelig artikkel

-

Debru, Abeba;

Kahsay, Mulu Bayray;

Cabrera, Maria Marta Molinas.

(2021)

ADAMA-II wind farm performance assessment in comparison to feasibility study.

Wind Engineering : The International Journal of Wind Power

Vitenskapelig artikkel

-

Singh, Rajiv;

Padmanaban, Sanjeevikumar;

Dwivedi, Sanjeet;

Cabrera, Maria Marta Molinas;

Blaabjerg, Frede.

(2021)

Cable Based and Wireless Charging Systems for Electric Vehicles:

Technology and control, management and grid integration.

Institution of Engineering and Technology (IET)

Institution of Engineering and Technology (IET)

Lærebok

-

Ojo, Joseph Olorunfemi;

Tsorng-Juu, Peter Liang;

Doolla, Suryanarayana;

Harnefors, Lennart;

Yuen, Ron Hui Shu;

Kawakami, Noriko.

(2021)

A Mustard Seed Planted Years Ago Sprouts and Continues to Grow.

IEEE Journal of Emerging and Selected Topics in Power Electronics

Leder

-

Tian, Bing;

Cabrera, Maria Marta Molinas;

An, Quntao;

Bo, Zhou;

Wei, Jiadan.

(2021)

Freewheeling Current-Based Sensorless Field-Oriented Control of Five-Phase Permanent Magnet Synchronous Motors Under IGBT Failures of a Single Phase.

IEEE transactions on industrial electronics (1982. Print)

Vitenskapelig artikkel

-

Chen, Zhang;

Isobe, Takanori;

Suul, Jon Are Wold;

Dragicevic, Tomislav;

Cabrera, Maria Marta Molinas.

(2021)

Parametric Stability Assessment of Single-Phase Grid-Tied VSCs Using Peak and Average DC Voltage Control.

IEEE transactions on industrial electronics (1982. Print)

Vitenskapelig artikkel

-

Wang, Yu;

Guan, Yuanpeng;

Fosso, Olav B;

Cabrera, Maria Marta Molinas;

Chen, Si-Zhe;

Zhang, Yun.

(2021)

An Input-Voltage-Sharing Control Strategy of Input-Series Output-Parallel Isolated Bidirectional DC/DC Converter for DC Distribution Network.

IEEE transactions on power electronics

Vitenskapelig artikkel

-

Singh, Rajiv;

Sanjeevikumar, Padmanaban;

Kumar, Dwivedi Sanjeet;

Cabrera, Maria Marta Molinas;

Blaabjerg, Frede.

(2021)

Cable Based and Wireless Charging Systems for Electric Vehicles: Technology and control, management and grid integration.

Institution of Engineering and Technology (IET)

Institution of Engineering and Technology (IET)

Leksikon

-

Liu, Jinhong;

Cabrera, Maria Marta Molinas.

(2021)

Impact of digital time delay on the stable grid-hosting capacity of large-scale centralised PV plant.

IET Renewable Power Generation

Vitenskapelig artikkel

-

Torres-García, Alejandro A.;

Mendoza-Montoya, Omar;

Cabrera, Maria Marta Molinas;

Antelisc, Javier M.;

Moctezuma, Luis Alfredo;

Hernández-Del-Toro, Tonatiuh.

(2021)

Pre-processing and Feature Extraction.

Faglig kapittel

-

Moctezuma, Luis Alfredo;

Cabrera, Maria Marta Molinas.

(2021)

EEG-based subject identification with multi-class classification.

Faglig kapittel

-

Shad, Erwin Habibzadeh Tonekabony;

Cabrera, Maria Marta Molinas;

Ytterdal, Trond.

(2021)

A Two-stage Area-efficient High Input Impedance CMOS Amplifier for Neural Signals.

Vitenskapelig Kapittel/Artikkel/Konferanseartikkel

-

Shad, Erwin Habibzadeh Tonekabony;

Moeinfard, Tania;

Cabrera, Maria Marta Molinas;

Ytterdal, Trond.

(2021)

A Low-power High-gain Inverter Stacking Amplifier with Rail-to-Rail Output.

Vitenskapelig Kapittel/Artikkel/Konferanseartikkel

-

Rosa, Paula Bastos Garcia;

Fosso, Olav B;

Cabrera, Maria Marta Molinas.

(2021)

Switching sequences for non-predictive declutching control of wave energy converters.

IFAC-PapersOnLine

Vitenskapelig artikkel

-

Guevara, Andres Felipe Soler;

Drange, Ole;

Furuki, Junya;

Abe, Takashi;

Molinas, Marta Maria Cabrera.

(2021)

Automatic Onset Detection of Rapid Eye Movements in REM Sleep EEG Data.

IFAC-PapersOnLine

Vitenskapelig artikkel

-

Ludvigsen, Sara Lund;

Buøen, Emma Horn;

Guevara, Andres Felipe Soler;

Molinas, Marta Maria Cabrera.

(2021)

Searching for Unique Neural Descriptors of Primary Colours in EEG Signals: A Classification Study.

Lecture Notes in Computer Science (LNCS)

Vitenskapelig artikkel

2020

-

Li, Chendan;

Fosso, Olav B;

Cabrera, Maria Marta Molinas;

Yue, Jingpeng;

Raboni, Pietro.

(2020)

Defining Three Distribution System Scenarios for Microgrid Applications.

Vitenskapelig Kapittel/Artikkel/Konferanseartikkel

-

Nøland, Jonas Kristiansen;

Cabrera, Maria Marta Molinas.

(2020)

Rotating Power Electronics for Electrical Machines and Drives - Design Considerations and Examples.

Vitenskapelig Kapittel/Artikkel/Konferanseartikkel

-

Torres-Garcia, Alejandro Antonio;

Moctezuma, Luis Alfredo;

Cabrera, Maria Marta Molinas.

(2020)

Assessing the impact of idle state type on the identification of RGB color exposure for BCI.

Vitenskapelig Kapittel/Artikkel/Konferanseartikkel

-

Pettersen, Martine Furnes;

Fosso, Olav B;

Rosa, Paula Bastos Garcia;

Cabrera, Maria Marta Molinas.

(2020)

Effect of non-ideal power take-off on the electric output power of a wave energy converter under suboptimal control.

Vitenskapelig Kapittel/Artikkel/Konferanseartikkel

-

Ghadikolaei, Jalal Khodaparast;

Fosso, Olav B;

Cabrera, Maria Marta Molinas;

Suul, Jon Are Wold.

(2020)

Stability Analysis of a Virtual Synchronous

Machine-based HVDC Link by Gear's Method.

Vitenskapelig Kapittel/Artikkel/Konferanseartikkel

-

Naderi, Kebria;

Shad, Erwin Habibzadeh Tonekabony;

Cabrera, Maria Marta Molinas;

Heidari, Ali.

(2020)

A Fully Tunable Low-power Low-noise and High Swing EMG Amplifier with 8.26 PEF.

Vitenskapelig Kapittel/Artikkel/Konferanseartikkel

-

Shad, Erwin Habibzadeh Tonekabony;

Cabrera, Maria Marta Molinas;

Ytterdal, Trond.

(2020)

A fully differential capacitively-coupled high CMRR low-power chopper amplifier for EEG dry electrodes.

Analog Integrated Circuits and Signal Processing

Vitenskapelig artikkel

-

Føyen, Sjur;

Zhang, Chen;

Cabrera, Maria Marta Molinas;

Fosso, Olav B;

Isobe, Takanori.

(2020)

Single-phase synchronisation with Hilbert

transformers: a linear and frequency independent

orthogonal system generator.

Vitenskapelig Kapittel/Artikkel/Konferanseartikkel

-

Villanueva, J.;

Lopez, Maximiliano Bueno;

Simón, J.;

Cabrera, Maria Marta Molinas;

Flores, J.;

Mendez, P.E..

(2020)

Application of Hilbert-Huang transform in the analysis of satellite-communication signals.

Revista Iberoamericana de Automática e Informática industrial

Vitenskapelig artikkel

-

Zhang, Chen;

Cabrera, Maria Marta Molinas;

Føyen, Sjur;

Suul, Jon Are Wold;

Isobe, Takanori.

(2020)

Harmonic-Domain SISO Equivalent Impedance Modeling and Stability Analysis of a Single-Phase Grid-Connected VSC.

IEEE transactions on power electronics

Vitenskapelig artikkel

-

Moctezuma, Luis Alfredo;

Cabrera, Maria Marta Molinas.

(2020)

Multi-objective optimization for EEG channel selection and accurate intruder detection in an EEG-based subject identification system.

Scientific Reports

Vitenskapelig artikkel

-

Guevara, Andres Felipe Soler;

Gutiérrez, Pablo Andrés Muñoz;

Bueno-Lopez, Maximiliano;

Giraldo, Eduardo;

Cabrera, Maria Marta Molinas.

(2020)

Low-Density EEG for Neural Activity Reconstruction Using Multivariate Empirical Mode Decomposition.

Frontiers in Neuroscience

Vitenskapelig artikkel

-

Guevara, Andres Felipe Soler;

Giraldo, Eduardo;

Cabrera, Maria Marta Molinas.

(2020)

Low-Density EEG for Source Activity Reconstruction using Partial Brain Models.

Vitenskapelig Kapittel/Artikkel/Konferanseartikkel

-

Sahoo, Subham;

Rana, Rubi;

Cabrera, Maria Marta Molinas;

Blaabjerg, Frede;

Dragicevic, Tomislav;

Mishra, Sukumar.

(2020)

A Linear Regression Based Resilient Optimal Operation of AC Microgrids.

Vitenskapelig Kapittel/Artikkel/Konferanseartikkel

-

Shad, Erwin;

Molinas, Marta;

Ytterdal, Trond.

(2020)

A Low-power and Low-noise Multi-purpose Chopper Amplifier with High CMRR and PSRR.

Vitenskapelig Kapittel/Artikkel/Konferanseartikkel

-

Zhang, Chen;

Føyen, Sjur;

Suul, Jon Are Wold;

Molinas, Marta Maria Cabrera.

(2020)

Modeling and Analysis of SOGI-PLL/FLL-based Synchronization Units: Stability Impacts of Different Frequency-feedback Paths.

IEEE transactions on energy conversion

Vitenskapelig artikkel

-

Naderi, Kebria;

Shad, Erwin Habibzadeh Tonekabony;

Molinas, Marta Maria Cabrera;

Heidari, Ali;

Ytterdal, Trond.

(2020)

A Very Low SEF Neural Amplifier by Utilizing a High Swing Current-Reuse Amplifier.

Vitenskapelig Kapittel/Artikkel/Konferanseartikkel

-

Li, Jing;

Liu, Qiujiang;

Zhai, Yating;

Cabrera, Maria Marta Molinas;

Wu, Mingli.

(2020)

Analysis of Harmonic Resonance for Locomotive and Traction Network Interacted System Considering the Frequency-Domain Passivity Properties of the Digitally Controlled Converter.

Frontiers in Energy Research

Vitenskapelig artikkel

-

Shad, Erwin Habibzadeh Tonekabony;

Cabrera, Maria Marta Molinas;

Ytterdal, Trond.

(2020)

Impedance and Noise of Passive and Active Dry EEG Electrodes: A Review.

IEEE Sensors Journal

Vitenskapelig oversiktsartikkel/review

-

Moctezuma, Luis Alfredo;

Cabrera, Maria Marta Molinas.

(2020)

Towards a minimal EEG channel array for a biometric system using resting-state and a genetic algorithm for channel selection.

Scientific Reports

Vitenskapelig artikkel

-

Liu, Jinhong;

Cabrera, Maria Marta Molinas.

(2020)

Impact of inverter digital time delay on the harmonic characteristics of grid-connected large-scale photovoltaic system.

IET Renewable Power Generation

Vitenskapelig artikkel

-

Naderi, Kebria;

Shad, Erwin Habibzadeh Tonekabony;

Cabrera, Maria Marta Molinas;

Heidari, Ali.

(2020)

A Power Efficient Low-noise and High Swing CMOS Amplifier for Neural Recording Applications.

IEEE Engineering in Medicine and Biology Society. Conference Proceedings

Vitenskapelig artikkel

-

Shad, Erwin Habibzadeh Tonekabony;

Naderi, Kebria;

Cabrera, Maria Marta Molinas.

(2020)

High Input Impedance Capacitively-coupled Neural Amplifier and Its Boosting Principle.

Vitenskapelig Kapittel/Artikkel/Konferanseartikkel

-

Zhang, Chen;

Cai, Xu;

Rygg, Atle;

Cabrera, Maria Marta Molinas.

(2020)

Modeling and analysis of grid-synchronizing stability of a Type-IV wind turbine under grid faults.

International Journal of Electrical Power & Energy Systems

Vitenskapelig artikkel

-

Zhang, Chen;

Cabrera, Maria Marta Molinas;

Føyen, Sjur;

Suul, Jon Are Wold;

Isobe, Takanori.

(2020)

An Integrated Method for Generating VSCs’ Periodical Steady-state Conditions and HSS-based Impedance Model.

IEEE Transactions on Power Delivery

Vitenskapelig artikkel

-

Moctezuma, Luis Alfredo;

Cabrera, Maria Marta Molinas.

(2020)

Classification of low-density EEG for epileptic seizures by

energy and fractal features based on EMD.

Journal of Biomedical Research (JBR)

Vitenskapelig artikkel

-

Zhang, Chen;

Cabrera, Maria Marta Molinas;

Zheng, Li;

Cai, Xu.

(2020)

Synchronizing Stability Analysis and Region of Attraction Estimation of Grid-Feeding VSCs Using Sum-of-Squares Programming.

Frontiers in Energy Research

Vitenskapelig artikkel

-

Lopez, Maximiliano Bueno;

Sanabria-Villamizar, Mauricio;

Cabrera, Maria Marta Molinas;

Bernal-Alzate, Efrain.

(2020)

Oscillation analysis of low-voltage distribution systems with high penetration of photovoltaic generation.

Electrical engineering (Berlin. Print)

Vitenskapelig artikkel

-

Zong, Haoxiang;

Zhang, Chen;

Lyu, Jing;

Cai, Xu;

Cabrera, Maria Marta Molinas;

Fangquan, Rao.

(2020)

Generalized MIMO Sequence Impedance Modeling and Stability Analysis of MMC-HVDC With Wind Farm Considering Frequency Couplings.

IEEE Access

Vitenskapelig artikkel

-

Moctezuma, Luis Alfredo;

Cabrera, Maria Marta Molinas.

(2020)

EEG Channel-Selection Method for Epileptic-Seizure Classification Based on Multi-Objective Optimization.

Frontiers in Neuroscience

Vitenskapelig artikkel

-

Tian, Bing;

Cabrera, Maria Marta Molinas;

Moen, Stig;

An, Qun-Tao.

(2020)

High dynamic speed control of the Subsea Smart Electrical Actuator for a Gas Production System.

Vitenskapelig Kapittel/Artikkel/Konferanseartikkel

-

Tian, Bing;

Sun, Li;

Cabrera, Maria Marta Molinas;

An, Quntao.

(2020)

Repetitive control based phase voltage modulation amendment for FOC-based five-phase PMSMs under single-phase open fault.

IEEE transactions on industrial electronics (1982. Print)

Vitenskapelig artikkel

-

Tian, Bing;

Cabrera, Maria Marta Molinas;

Moen, Stig.

(2020)

2020 IEEE International Conference on Industrial Technology (ICIT).

IEEE (Institute of Electrical and Electronics Engineers)

IEEE (Institute of Electrical and Electronics Engineers)

Vitenskapelig antologi/Konferanseserie

-

Wu, Mingli;

Li, Jing;

Liu, Qiujiang;

Yang, Shaobing;

Cabrera, Maria Marta Molinas.

(2020)

Measurement of Impedance-Frequency Property of Traction Network Using Cascaded H-Bridge Converters: Device Design and On-Site Test.

IEEE transactions on energy conversion

Vitenskapelig artikkel

-

Villanueva, J.;

Lopez, Maximiliano Bueno;

Cabrera, Maria Marta Molinas;

Flores, J;

Mendez, PE.

(2020)

Aplicación de la transformada de Hilbert-Huang en el análisis de señales de comunicación satelital.

Revista Iberoamericana de Automática e Informática industrial

Vitenskapelig artikkel

-

Tarasiuk, Tomasz;

Zunino, Yuri;

Lopez, Maximiliano Bueno;

Silvestro, Federico;

Pilatis, Andrzej;

Cabrera, Maria Marta Molinas.

(2020)

Frequency Fluctuations in Marine Microgrids: Origins and Identification Tools.

IEEE Electrification Magazine

Vitenskapelig artikkel

-

Tian, Bing;

Cabrera, Maria Marta Molinas;

An, Quntao.

(2020)

PWM investigation of a Field-oriented controlled Five-Phase PMSM under two-phase open faults.

IEEE transactions on energy conversion

Vitenskapelig artikkel

-

Nøland, Jonas Kristiansen;

Leandro, Matteo;

Suul, Jon Are Wold;

Cabrera, Maria Marta Molinas.

(2020)

High-Power Machines and Starter-Generator Topologies for More Electric Aircraft: A Technology Outlook.

IEEE Access

Vitenskapelig artikkel

-

Shad, Erwin Habibzadeh Tonekabony;

Moeinfard, Tania;

Cabrera, Maria Marta Molinas;

Ytterdal, Trond.

(2020)

A Power Efficient, High Gain and High Input Impedance Capacitively-coupled Neural Amplifier.

Vitenskapelig Kapittel/Artikkel/Konferanseartikkel

2019

-

Haring, Mark;

Skjong, Espen;

Johansen, Tor Arne;

Molinas, Marta.

(2019)

Extremum-Seeking Control for Harmonic Mitigation in Electrical Grids of Marine Vessels.

IEEE transactions on industrial electronics (1982. Print)

Vitenskapelig artikkel

-

Rosa, Paula Bastos Garcia;

Cabrera, Maria Marta Molinas;

Fosso, Olav B.

(2019)

Discussion on the mode mixing in wave energy control systems using the Hilbert-Huang transform.

Proceedings of the European Wave and Tidal Energy Conference (EWTEC)

Vitenskapelig artikkel

-

Gasca, Maria Victoria;

Garces, Alejandro;

Cabrera, Maria Marta Molinas.

(2019)

Stability Analysis of the Proportional-Resonant Controller in Single Phase Converters.

Vitenskapelig Kapittel/Artikkel/Konferanseartikkel

-

Cabrera, Maria Marta Molinas.

(2019)

2019 IEEE Workshop on Power Electronics and Power Quality Applications (PEPQA).

IEEE (Institute of Electrical and Electronics Engineers)

IEEE (Institute of Electrical and Electronics Engineers)

Vitenskapelig antologi/Konferanseserie

-

Tian, Bing;

Cabrera, Maria Marta Molinas;

Moen, Stig;

An, Qun-Tao.

(2019)

Non-filter position sensorless control based on a α-β frame complex PI controller.

Vitenskapelig Kapittel/Artikkel/Konferanseartikkel

-

Zhang, Chen;

Cabrera, Maria Marta Molinas;

Lyu, Jing;

Zong, Haoxiang;

Cai, Xu.

(2019)

Understanding the Nonlinear Behaviour and Synchronizing Stability of a Grid-Tied VSC Under Grid Voltage Sags.

Vitenskapelig Kapittel/Artikkel/Konferanseartikkel

-

Zong, Haoxiang;

Lyu, Jing;

Zhang, Chen;

Cai, Xu;

Cabrera, Maria Marta Molinas;

Rao, Fangquan.

(2019)

Mimo impedance based stability analysis of DFIG-based wind farm with MMC-HVDC in modifed sequence domain.

Vitenskapelig Kapittel/Artikkel/Konferanseartikkel

-

Tian, Bing;

An, Qun-Tao;

Cabrera, Maria Marta Molinas.

(2019)

High-Frequency Injection-Based Sensorless Control for a General Five-Phase BLDC Motor Incorporating System Delay and Phase Resistance.

IEEE Access

Vitenskapelig artikkel

-

Zong, Haoxiang;

Lyu, Jing;

Zhang, Chen;

Cai, Xu;

Cabrera, Maria Marta Molinas;

Rao, Fangquan.

(2019)

Modified sequence domain impedance modelling of the modular multilevel converter.

Vitenskapelig Kapittel/Artikkel/Konferanseartikkel

-

Bueno-Lopez, Maximiliano;

Giraldo, Eduardo;

Cabrera, Maria Marta Molinas;

Fosso, Olav B.

(2019)

The Mode Mixing Problem and its Influence in the Neural Activity Reconstruction.

IAENG International Journal of Computer Science

Vitenskapelig artikkel

-

Alessandro, Boveri;

Silvestro, Federico;

Cabrera, Maria Marta Molinas;

Espen, Skjong.

(2019)

Optimal Sizing of Energy Storage Systems for Shipboard Applications.

IEEE transactions on energy conversion

Vitenskapelig artikkel

-

Moctezuma, Luis Alfredo;

Cabrera, Maria Marta Molinas.

(2019)

Subject Identification from Low-Density EEG-Recordings of Resting-States: A Study of Feature Extraction and Classification.

Lecture Notes in Networks and Systems

Vitenskapelig artikkel

-

Zhang, Chen;

Cabrera, Maria Marta Molinas;

Rygg, Atle;

Cai, Xu.

(2019)

Impedance-based Analysis of Interconnected

Power Electronics Systems: Impedance

Network Modeling and Comparative Studies of

Stability Criteria.

IEEE Journal of Emerging and Selected Topics in Power Electronics

Vitenskapelig artikkel

-

Nøland, Jonas Kristiansen;

Leandro, Matteo;

Suul, Jon Are Wold;

Cabrera, Maria Marta Molinas;

Nilssen, Robert.

(2019)

Electrical Machines and Power Electronics for Starter-Generators in More Electric Aircrafts: A Technology Review.

Academic chapter/article/conference paper

-

Unamuno, Eneko;

Suul, Jon Are Wold;

Cabrera, Maria Marta Molinas;

Barrena, Jon Andoni.

(2019)

Comparative Eigenvalue Analysis of Synchronous Machine Emulations and Synchronous Machines.

Academic chapter/article/conference paper

-

Amin, Mohammad;

Zhang, Chen;

Rygg, Atle;

Cabrera, Maria Marta Molinas;

Unamuno, Eneko;

Belkhayat, Mohamed.

(2019)

Nyquist Stability Criterion and its Application to Power Electronics Systems.

Encyclopedia article

-

Sang, Shun;

Zhang, Chen;

Cai, Xu;

Cabrera, Maria Marta Molinas;

Zhang, Jianwen;

Rao, Fangquan.

(2019)

Control of a Type-IV Wind Turbine with the Capability of Robust Grid-Synchronization and Inertial Response for Weak Grid Stable Operation.

IEEE Access

Academic article

-

Zhang, Chen;

Cai, Xu;

Cabrera, Maria Marta Molinas;

Rygg, Atle.

(2019)

Frequency-domain modelling and stability analysis of a DFIG-based wind energy conversion system under non-compensated AC grids: impedance modelling effects and consequences on stability.

IET Power Electronics

Academic article

-

Zhang, Chen;

Cabrera, Maria Marta Molinas;

Rygg, Atle;

Lyu, Jing;

Cai, Xu.

(2019)

Harmonic Transfer-function-based Impedance Modelling of a Three-phase VSC for Asymmetric AC Grids Stability Analysis.

IEEE transactions on power electronics

Academic article

-

Sanabria-Villamizar, Mauricio;

Lopez, Maximiliano Bueno;

Cabrera, Maria Marta Molinas;

Bernal, Efrain.

(2019)

Hybrid Technique for the Analysis of Non-Linear and Non-Stationary Signals focused on Power Quality.

IEEE Xplore Digital Library

Academic article

-

Rosa, Paula Bastos Garcia;

Brodtkorb, Astrid H.;

Sørensen, Asgeir Johan;

Cabrera, Maria Marta Molinas.

(2019)

Evaluation of wave-frequency motions extraction from dynamic positioning measurements using the empirical mode decomposition.

Academic chapter/article/conference paper

-

Guevara, Andres Felipe Soler;

Cabrera, Maria Marta Molinas;

Giraldo, Eduardo.

(2019)

Partial Brain Model For real-time classification of RGB visual stimuli: A brain mapping approach to BCI.

Academic chapter/article/conference paper

-

Munoz, Pablo;

Giraldo, Eduardo;

Lopez, Maximiliano Bueno;

Cabrera, Maria Marta Molinas.

(2019)

Automatic Selection of Frequency Bands for Electroencephalographic Source Localization.

International IEEE/EMBS Conference on Neural Engineering

Academic article

-

Shad, Erwin Habibzadeh Tonekabony;

Cabrera, Maria Marta Molinas;

Ytterdal, Trond.

(2019)

Modified Current-reuse OTA to Achieve High CMRR by utilizing Cross-coupled Load.

Academic chapter/article/conference paper

-

Bergna-Diaz, Gilbert;

Suul, Jon Are Wold;

Berne, Erik;

Vannier, Jean-Claude;

Cabrera, Maria Marta Molinas.

(2019)

Optimal Shaping of the MMC Circulating Currents for Preventing AC-Side Power Oscillations from Propagating into HVdc Grids.

IEEE Journal of Emerging and Selected Topics in Power Electronics

Academic article

-

Torres-Garcia, Alejandro Antonio;

Cabrera, Maria Marta Molinas.

(2019)

Analyzing the Recognition of Color Exposure and Imagined Color from EEG Signals.

Academic chapter/article/conference paper

-

Maldonado, Juvenal Villanueva;

Lopez, Maximiliano Bueno;

Rodriguez, Jorge Simon;

Cabrera, Maria Marta Molinas;

Troncoso, Jorge Flores;

Monroy, Paul Erick Mendez.

(2019)

Aplicación de la Transformada de Hilbert-Huang en el Análisis de Señales de Comunicación Satelital.

Revista Iberoamericana de Automática e Informática industrial

Academic article

-

Monti, Antonello;

Cabrera, Maria Marta Molinas.

(2019)

A Ship Is a Microgrid and a Microgrid Is a Ship: Commonalities and Synergies.

IEEE Electrification Magazine

Editorial

-

Corigliano, Silvia;

Mocecchi, Matteo;

Mirbagheri, Mina;

Merlo, Marco;

Cabrera, Maria Marta Molinas.

(2019)

Microgrid design: sensitivity on models and parameters.

Academic chapter/article/conference paper

-

Cabrera, Maria Marta Molinas.

(2019)

19th International Conference on Bioinformatics and Bioengineering.

IEEE (Institute of Electrical and Electronics Engineers)

Academic anthology/conference proceedings

-

Moctezuma, Luis Alfredo;

Cabrera, Maria Marta Molinas.

(2019)

Sex differences observed in a study of EEG of linguistic activity and resting-state: Exploring optimal EEG channel configurations.

Academic chapter/article/conference paper

-

Lopez, Maximiliano Bueno;

Giraldo, Eduardo;

Cabrera, Maria Marta Molinas;

Fosso, Olav B.

(2019)

The mode mixing problem and its influence in the neural activity reconstruction.

IAENG International Journal of Computer Science

Academic article

-

Moctezuma, Luis Alfredo;

Cabrera, Maria Marta Molinas.

(2019)

Event-related potential from EEG for a two-step Identity Authentication System.

IEEE Conference on Industrial Informatics

Academic article

-

Shad, Erwin Habibzadeh Tonekabony;

Cabrera, Maria Marta Molinas;

Ytterdal, Trond.

(2019)

2019 15th Conference on Ph.D Research in Microelectronics and Electronics (PRIME).

IEEE (Institute of Electrical and Electronics Engineers)

Academic anthology/conference proceedings

-

Åsly, Sara Hegdahl;

Moctezuma, Luis Alfredo;

Gilde, Monika;

Cabrera, Maria Marta Molinas.

(2019)

Towards EEG-based signals classification of RGB color-based stimuli.

Professional chapter

-

Bueno-López, Maximiliano;

Muñoz-Gutiérrez, Pablo Andrés;

Giraldo, Eduardo;

Cabrera, Maria Marta Molinas.

(2019)

Electroencephalographic Source Localization based on Enhanced Empirical Mode Decomposition.

IAENG International Journal of Computer Science

Academic article

-

Xie, Xiarong;

Wang, Xiongfei;

Hu, Jiabing;

Xu, Lie;

Bongiorno, Massimo;

Miao, Zhixin.

(2019)

Guest Editorial: Oscillations in Power Systems with High Penetration of Renewable Power Generations.

IET Renewable Power Generation

Editorial

-

Skjong, Espen;

Johansen, Tor Arne;

Cabrera, Maria Marta Molinas.

(2019)

Distributed control architecture for real-time model predictive control for system-level harmonic mitigation in power systems.

ISA transactions

Academic article

-

Leandro, Matteo;

Bianchi, Nicola;

Cabrera, Maria Marta Molinas;

Ummaneni, Ravindra Babu.

(2019)

Low Inductance Effects on Electric Drives using Slotless Permanent Magnet Motors: A Framework for Performance Analysis.

Academic chapter/article/conference paper

-

Amin, Mohammad;

Cabrera, Maria Marta Molinas.

(2019)

A Gray-Box Method for Stability and Controller Parameter Estimation in HVDC-Connected Wind Farms Based on Nonparametric Impedance.

IEEE transactions on industrial electronics (1982. Print)

Academic article

-

Guevara, Andres Felipe Soler;

Giraldo, Eduardo;

Cabrera, Maria Marta Molinas.

(2019)

DYNLO: Enhancing Non-linear Regularized State Observer Brain Mapping Technique by Parameter Estimation with Extended Kalman Filter.

Lecture Notes in Computer Science (LNCS)

Academic article

-

Tian, Bing;

Cabrera, Maria Marta Molinas;

Moen, Stig;

An, Qun-Tao.

(2019)

Non-filter position sensorless control based on a α-β frame complex PI controller.

IEEE (Institute of Electrical and Electronics Engineers)

Academic anthology/conference proceedings

-

Zong, Haoxiang;

Lyu, Jing;

Cai, Xu;

Zhang, Chen;

Cabrera, Maria Marta Molinas;

Rao, Fangquan.