In the SoftICE lab, several bachelor projects over the years have examined how inexpensive commercial off-the-shelf (COTS) electroencephalography (EEG) equipment can enable brain control in virtual environments. Specifically, we have been using the scientific version of the Emotiv Epoc EEG headset, which has 14 sensors that measure raw EEG signals on the scalp. The Emotiv software can filter (convert) these signals in real time into suitable control signals and pass them on to virtual environments in the 3D game engine Unity, enabling real-time control of objects and characters in a virtual world using brain waves alone.

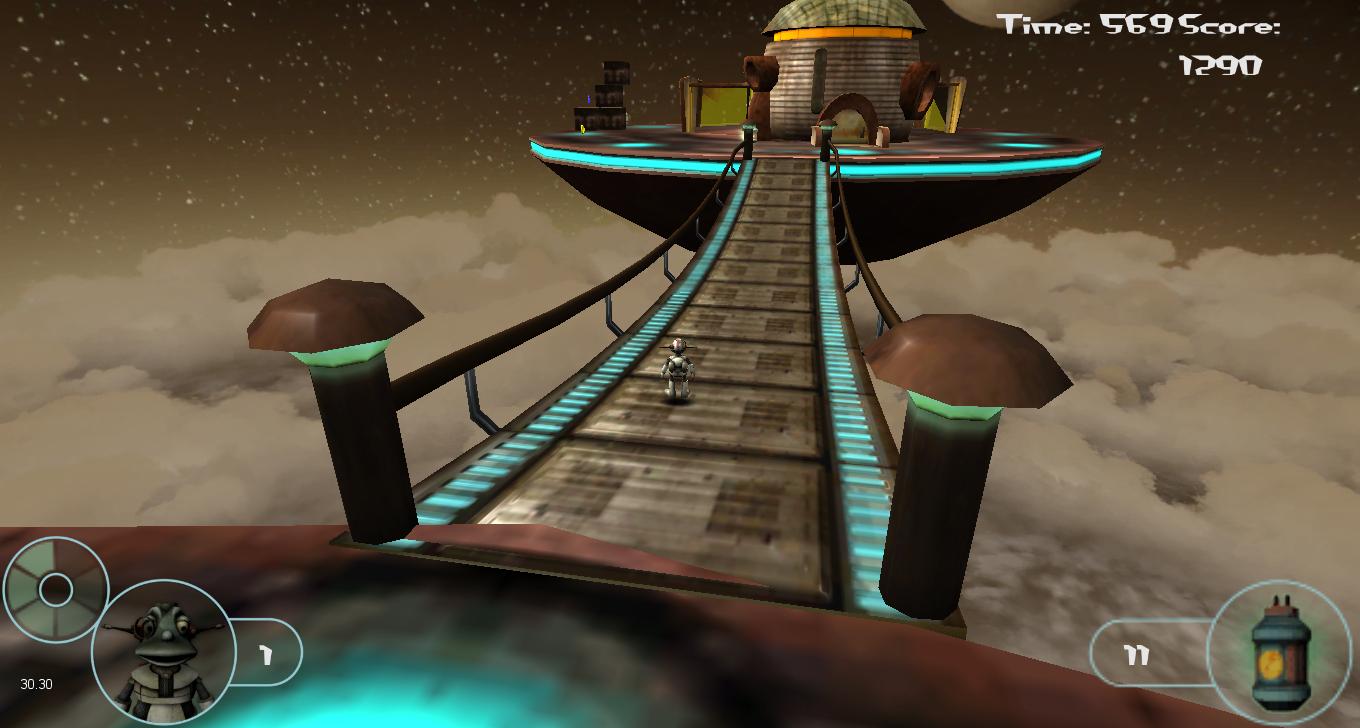

In the first bachelor project, which we ran as early as 2011, our students demonstrated a proof of concept by developing an interface between readings from the EEG headset and a Unity demo game called Lerpz Escapes. After some training sessions to fine-tune personal Emotiv control profiles (the Emotiv control software needs to ‘learn’ the EEG signals of each individual user), the students were able to control a 3D third-person character in the game using only their minds.

A YouTube video demonstrating the results is shown below:

This year, two bachelor projects have taken this initial work one step further.

The first project was carried out by a group consisting of students Fredrik Hoel Helgesen, Rolf-Magnus Hjørungdal, and Daniel Nedregård, and was supervised by AAUC staff Robin T. Bye and Anders Sætersmoen, with additional insights provided by staff members Filippo Sanfilippo and Hans Georg Schaathun. The students used Unity to develop a virtual reality environment that can serve as a training platform for controlling a motorised wheelchair by means of brain waves (EEG) alone. Their work was inspired by patients who suffer from amyotrophic lateral sclerosis (ALS), also known as Lou Gehrig’s disease, and who therefore gradually become completely paralysed and unable to control conventional electric wheelchairs using their hands or chin. Following a set of training sessions, users develop their brain control skills and are able to control a motorised wheelchair in realistic virtual environments with streets, buildings, pedestrians, trees, and so on.

The group also did some preliminary work on using artificial neural networks to map the EEG signals to appropriate motor commands, and examined the use of the Oculus Rift for virtual reality.
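To give a rough idea of what mapping EEG-derived features to motor commands with a neural network can look like, here is a minimal Python sketch. It is not the group's code: the command set, the two-dimensional features, and the synthetic training data are all assumptions made for illustration, and the model is a single-layer softmax network, the simplest kind of artificial neural network.

```python
import math
import random

# Hypothetical command set; the project's actual commands are not stated.
COMMANDS = ["forward", "left", "right"]


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def forward_pass(weights, biases, features):
    """Compute one probability per command for a feature vector."""
    scores = [
        sum(w * x for w, x in zip(row, features)) + b
        for row, b in zip(weights, biases)
    ]
    return softmax(scores)


def train(samples, n_features, epochs=100, lr=0.1):
    """Fit the network with stochastic gradient descent on cross-entropy loss."""
    n_classes = len(COMMANDS)
    weights = [[0.0] * n_features for _ in range(n_classes)]
    biases = [0.0] * n_classes
    for _ in range(epochs):
        for features, label in samples:
            probs = forward_pass(weights, biases, features)
            for k in range(n_classes):
                error = probs[k] - (1.0 if k == label else 0.0)
                for j in range(n_features):
                    weights[k][j] -= lr * error * features[j]
                biases[k] -= lr * error
    return weights, biases


def predict(weights, biases, features):
    """Return the most probable command for a feature vector."""
    probs = forward_pass(weights, biases, features)
    return COMMANDS[probs.index(max(probs))]


# Synthetic stand-in for EEG features: one noisy cluster per command.
rng = random.Random(42)
CENTERS = [(1.0, 0.0), (0.0, 1.0), (-1.0, -1.0)]
samples = [
    ([cx + rng.gauss(0, 0.2), cy + rng.gauss(0, 0.2)], label)
    for label, (cx, cy) in enumerate(CENTERS)
    for _ in range(30)
]
rng.shuffle(samples)

weights, biases = train(samples, n_features=2)
print(predict(weights, biases, [0.9, 0.1]))
```

In a real system the feature vectors would come from the headset rather than from synthetic clusters, and a deeper network or a different classifier might well be needed.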

The source code is freely available on GitHub. The usual standards for citing, using and modifying scientific intellectual property apply.

A YouTube video demonstrating the results is shown below:

The second project was carried out by international exchange student Tom Verplaetse (originally at University College Ghent, Belgium) and supervised by AAUC staff Robin T. Bye and Filippo Sanfilippo. Tom examined how EEG control can be used as a new rehabilitation technique for stroke victims who have lost the ability to move one or both of their hands, a condition called partial paraplegia. Partial paraplegia can be healed by months or sometimes years of physical therapy and other therapies, and developing new rehabilitation techniques is an active field of research worldwide. In Tom's work, the idea was to create a 3D environment in which the rehabilitating patient can move a visual representation of the paraplegic hand, thus achieving the same effect as that of mirror therapy. Mirror therapy relies on the ability to trick the brain into thinking it can move a hand that is not really there but is merely a visual representation.

The software developed in this project provides a 3D representation of that hand and lets the patient control it using brain waves. Visual stimulation at specific frequencies by means of a flickering light evoked steady-state visually evoked potentials (SSVEP) that clearly enhanced both alpha and beta EEG activity.

Hopefully, this process of brain pattern recognition and brain activation of the specific regions needed for motor function could lead to a faster and more efficient rehabilitation process, without much need for expensive equipment or human helpers such as physiotherapists or nurses.
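The flicker-based stimulation described above can be illustrated with a small Python sketch. It is not the project's code: it assumes a single EEG channel sampled at 128 Hz, a hypothetical set of candidate flicker frequencies, and a synthetic signal in place of real recordings. It estimates the signal power at each candidate frequency with a single-bin discrete Fourier transform and picks the strongest one, which is one common way to look for an SSVEP response.

```python
import math
import random


def power_at(signal, fs, freq):
    """Power of `signal` at `freq` Hz, from a single-bin discrete Fourier transform."""
    n = len(signal)
    step = 2 * math.pi * freq / fs
    re = sum(x * math.cos(step * i) for i, x in enumerate(signal))
    im = sum(x * math.sin(step * i) for i, x in enumerate(signal))
    return (re * re + im * im) / (n * n)


def detect_ssvep(signal, fs, candidate_freqs):
    """Return the candidate frequency with the most power, plus all powers."""
    powers = {f: power_at(signal, fs, f) for f in candidate_freqs}
    return max(powers, key=powers.get), powers


# Assumed sampling rate and candidate flicker frequencies (illustrative only).
FS = 128
CANDIDATES = [8.0, 10.0, 12.0, 15.0]
DURATION_S = 4

# Synthetic channel: a 12 Hz response buried in Gaussian noise.
rng = random.Random(0)
signal = [
    math.sin(2 * math.pi * 12.0 * i / FS) + rng.gauss(0, 1.0)
    for i in range(FS * DURATION_S)
]

best, powers = detect_ssvep(signal, FS, CANDIDATES)
for freq in CANDIDATES:
    print(f"{freq:5.1f} Hz: {powers[freq]:.4f}")
print("strongest response at", best, "Hz")
```

Real recordings would need artefact removal and averaging over several channels and trials before a detection like this becomes reliable.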

Source code can be obtained upon request.

A YouTube video demonstrating the results is shown below:

For more information, please contact SoftICE member Robin T. Bye.