Within the field of medical technology, the CPS Lab has the following main focus area: Computer Haptic-Assisted Orthopaedic Surgery (CHAOS).

We also do research on neuroengineering, as described separately below.

The following CPS members are involved in medical technology research:

- Webjørn Rekdalsbakken, associate professor, IIR (research area coordinator until end of 2020)

- Hans Georg Schaathun, professor, IIR (research area coordinator from 2021)

- Robin T. Bye, associate professor, IIR

- Ottar L. Osen, associate professor, IIR

- Kjell-Inge Gjesdal, associate professor II, IIR

- Kai Erik Hoff, assistant professor, IIR

- Aleksander Skrede, PhD candidate, IIR

- Øystein Bjelland, PhD candidate, IIR

- N.N., PhD candidate, IIR

Alumni:

- N. Varatharajan (Rajan), postdoc, IIR, funded by ERCIM

- Jørgen André Sperre, MSc in simulation and visualization

- Li Lu, MSc in simulation and visualization

- Martin Pettersen, MSc in simulation and visualization

- Eirik Gromholt Homlong, MSc in simulation and visualization

Please contact Webjørn Rekdalsbakken for general inquiries about Medical Technology, or Robin T. Bye for inquiries on CHAOS and neuroengineering research.

CHAOS

Our work on CHAOS and biomechanics involves robotic testing of human joints before and after orthopaedic surgery. Together with Ålesund hospital, the CPS Lab has established a Biomechanics Laboratory, where a Kuka KR 6 R900 sixx industrial robot was installed in autumn 2018.

The lab was officially opened on 6 March 2019 and has received media attention since then:

- Sunnmørsposten: Åpner unik ledd-lab på Ålesund sjukehus

- Sunnmørsposten: Nytt forskningsprosjekt skal utvikle en operasjons-simulator

- Helse MR – nyheiter: Opna lab med knokkelrobot

- Helse MR – fag og forskning: Robotiserte knoklar

Combined with a high-quality force sensor, the robot can be used for high-fidelity testing of the forces involved in movements of human joints such as the shoulder or the knee. The lab will be used for the following:

- testing and providing insight into the effect of a number of orthopaedic procedures

- construction of kinematic and anatomical models of human joints

- development of algorithms for robot planning and control

- exploration of computer vision for robot control

Moreover, we are exploring modelling and design of patient-adapted orthopaedic braces for bone fractures in the arm.

In addition, the CPS Lab has an ongoing cooperation with Sunnmøre MR-klinikk (a clinic for MR imaging) with access to a state-of-the-art MR machine, which will be used for the following:

- MR image segmentation and classification using advanced image processing techniques, machine learning, and other relevant technology

- construction of anatomical and physiological models of human joints and body parts

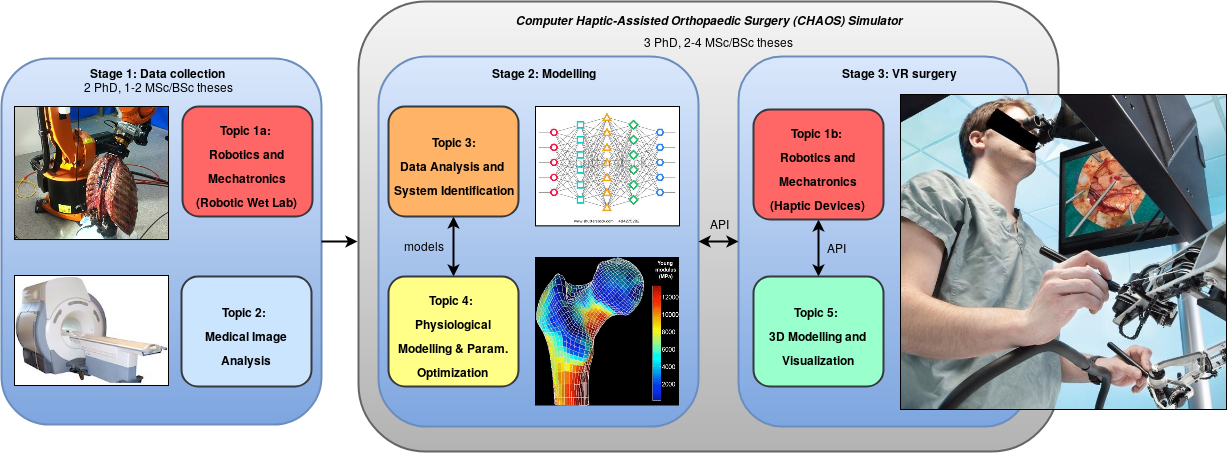

Our long-term term goal is to build a VR/AR simulator for computer haptic-assisted orthopaedic surgery (CHAOS) that can be used for patient-customised training of orthopaedic surgeons and exploration of new orthopaedic surgery procedures, as depicted in the figure below:

Neuroengineering

In the field of neuroengineering, we have explored the mechanisms involved in the human motor-sensory loop. Modelling the human brain as a motor control system based on fundamentals from cybernetics, signal processing, mathematics, etc., we have constructed biologically-feasible models able to explain many of the physiological phenomena involved in human movements, including speed-accuracy tradeoffs, velocity profiles, and physiological 10 Hz tremor in aimed movements (see the Publications of CPS Lab member Robin T. Bye).

We have also explored the potential for using EEG brain waves for “mind control” and communication, possibly with the addition of EMG. Interfacing EEG signals with the Unity 3D game engine or other software, we have constructed prototypes of mind control systems for stroke rehabilitation, electric wheelchairs, and text messages; see, for example, EEG brain control for ALS and stroke patients.

In our first bachelor project, run as early as 2011, our students demonstrated a proof of concept by developing an interface between readings from the EEG headset and a Unity demo game called Lerpz Escapes. After some training sessions for fine-tuning personal Emotiv control profiles (the Emotiv control software needs to ‘learn’ the EEG signals of each individual user), the students were able to control a 3D third-person character in the computer game using only their minds.

A YouTube video demonstrating the results is shown below:

In 2016, students used Unity to develop a virtual reality environment that can serve as a training platform for controlling a motorised wheelchair by means of brain waves (EEG) alone. Their work was inspired by patients with amyotrophic lateral sclerosis (ALS), also known as Lou Gehrig’s disease, who gradually become completely paralysed and unable to control conventional electric wheelchairs using their hands or chin. Through a set of training sessions, users develop their brain control skills and learn to control a motorised wheelchair in realistic virtual environments with streets, buildings, pedestrians, trees, and so on.

The students also did some preliminary work on using artificial neural networks to map the EEG signals to appropriate motor commands, and examined the use of the Oculus Rift for virtual reality.
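As a rough illustration of what such a mapping could look like, the sketch below trains a tiny one-hidden-layer network on synthetic two-dimensional feature vectors. It is not the students' implementation: the command set, the synthetic features, the network size, and all function names are assumptions made for this example only.

```python
import numpy as np

# Hypothetical command set; the actual project may have used different commands.
COMMANDS = ["forward", "left", "right"]

def make_synthetic_features(rng, n_per_class=100):
    """Generate well-separated 2-D clusters standing in for EEG feature vectors."""
    centres = np.array([[0.0, 2.0], [-2.0, -1.0], [2.0, -1.0]])
    X = np.vstack([c + 0.3 * rng.standard_normal((n_per_class, 2)) for c in centres])
    y = np.repeat(np.arange(len(centres)), n_per_class)
    return X, y

def softmax(z):
    """Row-wise softmax with the usual max-subtraction for numerical stability."""
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def train_mlp(X, y, hidden=8, epochs=1000, lr=0.1, seed=0):
    """Train a one-hidden-layer tanh network with full-batch gradient descent."""
    rng = np.random.default_rng(seed)
    n_in, n_out = X.shape[1], int(y.max()) + 1
    W1 = 0.5 * rng.standard_normal((n_in, hidden))
    b1 = np.zeros(hidden)
    W2 = 0.5 * rng.standard_normal((hidden, n_out))
    b2 = np.zeros(n_out)
    Y = np.eye(n_out)[y]  # one-hot targets
    for _ in range(epochs):
        H = np.tanh(X @ W1 + b1)          # hidden activations
        P = softmax(H @ W2 + b2)          # command probabilities
        dZ2 = (P - Y) / len(X)            # gradient of mean cross-entropy
        dW2, db2 = H.T @ dZ2, dZ2.sum(axis=0)
        dZ1 = (dZ2 @ W2.T) * (1.0 - H ** 2)
        dW1, db1 = X.T @ dZ1, dZ1.sum(axis=0)
        W1 -= lr * dW1
        b1 -= lr * db1
        W2 -= lr * dW2
        b2 -= lr * db2
    return W1, b1, W2, b2

def predict(params, X):
    """Return the index of the most probable command for each feature vector."""
    W1, b1, W2, b2 = params
    return softmax(np.tanh(X @ W1 + b1) @ W2 + b2).argmax(axis=1)

rng = np.random.default_rng(42)
X, y = make_synthetic_features(rng)
params = train_mlp(X, y)
accuracy = float((predict(params, X) == y).mean())
command = COMMANDS[predict(params, np.array([[0.0, 2.0]]))[0]]
print(f"training accuracy: {accuracy:.2f}, example command: {command}")
```

Real EEG features are far noisier and less separable than these synthetic clusters, so a practical system would need per-user calibration data and held-out validation.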

The source code is freely available on GitHub. The usual standards for citing, using and modifying scientific intellectual property apply.

A YouTube video demonstrating the results is shown below:

In another project from 2016, software was developed that provides a 3D representation of a paralysed hand and lets users control it with their own brain waves. Visual stimulation at specific frequencies by means of a flickering light led to steady-state visually evoked potentials (SSVEP) that clearly enhanced both alpha and beta EEG activity. We hope that this process of brain pattern recognition and activation of the specific brain regions needed for motor function can lead to faster and more efficient rehabilitation, with less need for expensive equipment or human helpers such as physiotherapists or nurses. Source code can be obtained upon request.
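To illustrate the general idea behind SSVEP detection, the sketch below compares spectral power around a few candidate flicker frequencies in a synthetic single-channel signal. This is a generic textbook approach, not the project's code: the sampling rate, the candidate frequencies, the signal itself, and the function names `band_power` and `detect_ssvep` are all assumptions for this example.

```python
import numpy as np

def band_power(signal, fs, f_lo, f_hi):
    """Mean periodogram power of `signal` within [f_lo, f_hi] Hz."""
    windowed = signal * np.hanning(len(signal))  # reduce spectral leakage
    spectrum = np.abs(np.fft.rfft(windowed)) ** 2
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    mask = (freqs >= f_lo) & (freqs <= f_hi)
    return float(spectrum[mask].mean())

def detect_ssvep(signal, fs, candidate_freqs, half_width=0.5):
    """Pick the candidate flicker frequency with the highest narrow-band power."""
    powers = {
        f: band_power(signal, fs, f - half_width, f + half_width)
        for f in candidate_freqs
    }
    return max(powers, key=powers.get), powers

# Synthetic stand-in for one EEG channel: a 12 Hz response buried in noise.
fs = 256.0                          # assumed sampling rate in Hz
t = np.arange(0, 4.0, 1.0 / fs)     # four seconds of data
rng = np.random.default_rng(0)
eeg = 2.0 * np.sin(2 * np.pi * 12.0 * t) + rng.normal(0.0, 1.0, t.size)

detected, powers = detect_ssvep(eeg, fs, [8.0, 12.0, 15.0])
print(f"detected flicker frequency: {detected} Hz")
```

The same `band_power` helper could be pointed at the conventional alpha (roughly 8 to 13 Hz) and beta (roughly 13 to 30 Hz) bands to quantify the kind of enhancement described above.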

A YouTube video demonstrating the results is shown below: